Statistical tests

Last updated on 2026-02-03 | Edit this page

Overview

Questions

- How do I run X statistical test?

Objectives

- Provide a description on how to run common statistical tests

A collection of statistical tests

For all tests, the approach is:

- Formulate hypotheses

- Calculate test statistic

- Determine p-value

- Make decision

The cutoff chosen for all tests is a p-value of 0.05 unless otherwise indicated.

If a specific test is prefaced with “EJ KORREKTURLÆST” the text, examples etc have not been checked.

One sample tests

Or, more complete - one-sample chi-square goodnes-of-fit test.

- Used for: Testing whether observed categorical frequencies differ from expected frequencies under a specified distribution.

- Real-world example: Mars Inc. claims a specific distribution of colours in their M&M bags. Do the observed proportions in a given bag match their claim?

Assumptions

- Observations are independent.

- Categories are mutually exclusive and collectively exhaustive.

- Expected count in each category is at least 5 (for the chi-square approximation to be valid). The observed counts can be smaller, it is the expected counts that are important.

Strengths

- Simple to compute and interpret.

- Does not require the data to be normally distributed.

- Applicable to any number of categories.

Weaknesses

- Sensitive to small expected counts.

- Does not indicate which categories contribute most to the discrepancy without further investigation.

- Requires independence; cannot be used for paired or repeated measures.

Example

-

Null hypothesis (H₀): The proportions of M&M

colours equal the manufacturer’s claimed distribution.

- Alternative hypothesis (H₁): The proportion of at least one colour differs from the claimed distribution.

R

# Observed counts of M&M colors:

observed <- c(red = 20, blue = 25, green = 15, brown = 18, orange = 12, yellow = 10)

# Manufacturer's claimed proportions.

p_expected <- c(red = 0.12, blue = 0.24, green = 0.16, brown = 0.14, orange = 0.20, yellow = 0.14)

# Perform one-sample chi-square goodness-of-fit test:

test_result <- chisq.test(x = observed, p = p_expected)

# Display results:

test_result

OUTPUT

Chi-squared test for given probabilities

data: observed

X-squared = 10.923, df = 5, p-value = 0.05292Interpretation: The test yields χ² = 10.9 with a p-value = 0.05292. We fail to reject the null hypothesis, and there is no evidence to conclude a difference from the claimed distribution.

Note that in this example, the number of observations sums to 100.

chisq.test() normalises the expected values, so we do not

have to match the numbers.

-

Used for: Testing whether the mean of a single

sample differs from a known population mean when the population standard

deviation is known.

- Real-world example: Checking if the average diameter of manufactured ball bearings equals the specified 5.00 cm when σ is known. It can differ in three ways. It can differ from the specification. That is a Two-sided test. It can be smaller, that is a (left side) One-sided test. And it can be larger, that is a (right side) One-sided test.

Assumptions

- Sample is a simple random sample from the population.

- Observations are independent.

- Population standard deviation (σ) is known.

- The sampling distribution of the mean is approximately normal (either the population is normal or n is large, e.g. ≥ 30).

Strengths

- More powerful than the t-test when σ is truly known.

- Simple calculation and interpretation.

Weaknesses

- The true population σ is only very rarely known in practice.

- Sensitive to departures from normality for small samples.

- Misspecification of σ leads to incorrect inferences.

Example

Two-sided:

-

Null hypothesis (H₀): The true mean diameter μ =

5.00 cm.

- Alternative hypothesis (H₁): μ ≠ 5.00 cm.

One-sided, left:

-

Null hypothesis (H₀): The true mean diameter μ =

5.00 cm.

- Alternative hypothesis (H₁): μ < 5.00 cm.

One-sided, right:

-

Null hypothesis (H₀): The true mean diameter μ =

5.00 cm.

- Alternative hypothesis (H₁): μ > 5.00 cm.

R

# Sample of diameters (cm) for 25 ball bearings:

diameters <- c(5.03, 4.97, 5.01, 5.05, 4.99, 5.02, 5.00, 5.04, 4.96, 5.00,

5.01, 4.98, 5.02, 5.03, 4.94, 5.00, 5.02, 4.99, 5.01, 5.03,

4.98, 5.00, 5.04, 4.97, 5.02)

# Known population standard deviation:

sigma <- 0.05

# Hypothesized mean:

mu0 <- 5.00

# Compute test statistic:

n <- length(diameters)

xbar <- mean(diameters)

z_stat <- (xbar - mu0) / (sigma / sqrt(n))

# Two-sided p-value:

two_sided_p_value <- 2 * (1 - pnorm(abs(z_stat)))

# Larger p-value

p_value_larger_mu <- 1- pnorm(z_stat)

# Smaller p-value

p_value_smaller_mu <- pnorm(z_stat)

# Output results:

z_stat; two_sided_p_value; p_value_larger_mu; p_value_smaller_mu

OUTPUT

[1] 0.44OUTPUT

[1] 0.6599371OUTPUT

[1] 0.3299686OUTPUT

[1] 0.6700314Interpretation:

The sample mean is 5.004 cm. The z-statistic is 0.44 with a two-sided p-value of 0.66. We fail to reject the null hypothesis. Thus, there is no evidence to conclude a difference from the specified diameter of 5.00 cm.

We can similarly reject the hypothesis that the average diameter is larger (p = 0.3299686) or that it is smaller (p = 0.6700314)

EJ KORREKTURLÆST!

Her kan vi nok med fordel bruge samme eksempel som i z-testen.

-

Used for: Testing whether the mean of a single

sample differs from a known or hypothesized population mean when the

population standard deviation is unknown.

- Real-world example: Determining if the average exam score of a class differs from the passing threshold of 70%.

Assumptions:

- Sample is a simple random sample from the population.

- Observations are independent.

- The data are approximately normally distributed (especially important for small samples; n ≥ 30 reduces sensitivity).

Strengths: * Does not require knowing the population

standard deviation.

* Robust to mild departures from normality for moderate-to-large sample

sizes.

* Widely applicable and easily implemented.

Weaknesses * Sensitive to outliers in small

samples.

* Performance degrades if normality assumption is seriously violated and

n is small.

* Degrees of freedom reduce power relative to z-test.

Example

-

Null hypothesis (H₀): The true mean exam score μ =

70.

- Alternative hypothesis (H₁): μ ≠ 70.

R

# Sample of exam scores for 20 students:

scores <- c(68, 74, 71, 69, 73, 65, 77, 72, 70, 66,

75, 68, 71, 69, 74, 67, 72, 70, 73, 68)

# Hypothesized mean:

mu0 <- 70

# Perform one-sample t-test:

test_result <- t.test(x = scores, mu = mu0)

# Display results:

test_result

OUTPUT

One Sample t-test

data: scores

t = 0.84675, df = 19, p-value = 0.4077

alternative hypothesis: true mean is not equal to 70

95 percent confidence interval:

69.1169 72.0831

sample estimates:

mean of x

70.6 Interpretation:

The sample mean is 70.6. The t-statistic is 0.85 with 19 degrees of freedom and a two-sided p-value of 0.408. We fail to reject the null hypothesis. Thus, there is no evidence to conclude the average score differs from the passing threshold of 70.

EJ KORREKTURLÆST!

-

Used for Testing whether the observed count of

events in a fixed period differs from a hypothesized Poisson rate.

- Real-world example: Checking if the number of customer arrivals per hour at a call center matches the expected rate of 30 calls/hour.

Assumptions * Events occur independently.

* The rate of occurrence (λ) is constant over the observation

period.

* The count of events in non-overlapping intervals is independent.

Strengths * Exact test based on the Poisson

distribution (no large-sample approximation needed).

* Valid for small counts and rare events.

* Simple to implement in R via poisson.test().

Weaknesses * Sensitive to violations of the Poisson

assumptions (e.g., overdispersion or time-varying rate).

* Only assesses the overall rate, not the dispersion or clustering of

events.

* Cannot handle covariates or more complex rate structures.

Example

-

Null hypothesis (H₀): The event rate λ = 30

calls/hour.

- Alternative hypothesis (H₁): λ ≠ 30 calls/hour.

R

# Observed number of calls in one hour:

observed_calls <- 36

# Hypothesized rate (calls per hour):

lambda0 <- 30

# Perform one-sample Poisson test (two-sided):

test_result <- poisson.test(x = observed_calls, T = 1, r = lambda0, alternative = "two.sided")

# Display results:

test_result

OUTPUT

Exact Poisson test

data: observed_calls time base: 1

number of events = 36, time base = 1, p-value = 0.272

alternative hypothesis: true event rate is not equal to 30

95 percent confidence interval:

25.21396 49.83917

sample estimates:

event rate

36 Interpretation:

The test reports an observed count of 36 calls versus an expected 30 calls, yielding a p-value of 0.272. We fail to reject the null hypothesis. Thus, there is no evidence to conclude the call rate differs from 30 calls/hour.

EJ KORREKTURLÆST. DEN HAR VI NOGET UNDERVISNINGSMATERIALE OM I “ER DET NORMALT?”

-

Used for Testing whether a sample comes from a

normally distributed population.

- Real-world example: Checking if the distribution of daily blood glucose measurements in a patient cohort is approximately normal.

Assumptions * Observations are independent.

* Data are continuous.

* No extreme ties or many identical values.

Strengths * Good power for detecting departures from

normality in small to moderate samples (n ≤ 50).

* Widely implemented and easy to run in R.

* Provides both a test statistic (W) and p-value.

Weaknesses * Very sensitive to even slight

deviations from normality in large samples (n > 2000).

* Does not indicate the nature of the departure (e.g., skewness

vs. kurtosis).

* Ties or repeated values can invalidate the test.

Example

-

Null hypothesis (H₀): The sample is drawn from a

normal distribution.

- Alternative hypothesis (H₁): The sample is not drawn from a normal distribution.

R

# Simulate a sample of 30 observations from a normal distribution:

set.seed(123)

sample_data <- rnorm(30, mean = 100, sd = 15)

# Perform Shapiro–Wilk test:

sw_result <- shapiro.test(sample_data)

# Display results:

sw_result

OUTPUT

Shapiro-Wilk normality test

data: sample_data

W = 0.97894, p-value = 0.7966Interpretation:

The Shapiro–Wilk statistic W = 0.979 with p-value = 0.797. We fail to reject the null hypothesis. Thus, there is no evidence to conclude a departure from normality.

EJ KORREKTURLÆST. DENNE HAR VI OGSÅ UNDERVISNINGSMATERIALE OM I “ER DET NORMALT”

-

Used for Testing whether a sample comes from a

specified continuous distribution.

- Real-world example: Checking if patient systolic blood pressures follow a normal distribution with mean 120 mmHg and SD 15 mmHg.

Assumptions * Observations are independent.

* Data are continuous (no ties).

* The null distribution is fully specified (parameters known, not

estimated from the data).

Strengths * Nonparametric: makes no assumption about

distribution shape beyond continuity.

* Sensitive to any kind of departure (location, scale, shape).

* Exact distribution of the test statistic under H₀.

Weaknesses * Requires that distribution parameters

(e.g., mean, SD) are known a priori; if estimated from data, p-values

are invalid.

* Less powerful than parametric tests when the parametric form is

correct.

* Sensitive to ties and discrete data.

Example

-

Null hypothesis (H₀): The blood pressure values

follow a Normal(μ = 120, σ = 15) distribution.

- Alternative hypothesis (H₁): The blood pressure values do not follow Normal(120, 15).

R

set.seed(2025)

# Simulate systolic blood pressure for 40 patients:

sample_bp <- rnorm(40, mean = 120, sd = 15)

# Perform one-sample Kolmogorov–Smirnov test against N(120,15):

ks_result <- ks.test(sample_bp, "pnorm", mean = 120, sd = 15)

# Show results:

ks_result

OUTPUT

Exact one-sample Kolmogorov-Smirnov test

data: sample_bp

D = 0.11189, p-value = 0.6573

alternative hypothesis: two-sidedInterpretation:

The KS statistic D = 0.112 with p-value = 0.657. We fail to reject the null hypothesis. Thus, there is no evidence to conclude deviation from Normal(120,15).

EJ KORREKTURLÆST

-

Used for Testing whether observed categorical

frequencies differ from expected categorical proportions.

- Real-world example: Comparing the distribution of blood types in a sample of donors to known population proportions.

Assumptions * Observations are independent.

* Categories are mutually exclusive and exhaustive.

* Expected count in each category is at least 5 for the chi-square

approximation to hold.

Strengths * Simple to compute and interpret.

* Nonparametric: no requirement of normality.

* Flexible for any number of categories.

Weaknesses * Sensitive to small expected counts

(invalidates approximation).

* Doesn’t identify which categories drive the discrepancy without

further post-hoc tests.

* Requires independence—unsuitable for paired or repeated measures.

Example

-

Null hypothesis (H₀): The sample blood type

proportions equal the known population proportions (A=0.42, B=0.10,

AB=0.04, O=0.44).

- Alternative hypothesis (H₁): At least one blood type proportion differs from its known value.

R

# Observed counts of blood types in 200 donors:

observed <- c(A = 85, B = 18, AB = 6, O = 91)

# Known population proportions:

p_expected <- c(A = 0.42, B = 0.10, AB = 0.04, O = 0.44)

# Perform chi-square goodness-of-fit test:

test_result <- chisq.test(x = observed, p = p_expected)

# Display results:

test_result

OUTPUT

Chi-squared test for given probabilities

data: observed

X-squared = 0.81418, df = 3, p-value = 0.8461Interpretation:

The test yields χ² = 0.81 with p-value = 0.846. We fail to reject the null hypothesis. Thus, there is no evidence to conclude the sample proportions differ from the population.

To-prøve-tests og parrede tests

EJ KORREKTURLÆST

We use this when we want to determine if two independent samples originate from populations with the same variance.

-

Used for Testing whether two independent samples

have equal variances.

- Real-world example: Comparing the variability in systolic blood pressure measurements between two clinics.

Assumptions * Both samples consist of independent

observations.

* Each sample is drawn from a normally distributed population.

* Samples are independent of one another.

Strengths * Simple calculation and

interpretation.

* Directly targets variance equality, a key assumption in many

downstream tests (e.g., t-test).

* Exact inference under normality.

Weaknesses * Highly sensitive to departures from

normality.

* Only compares variance—doesn’t assess other distributional

differences.

* Not robust to outliers.

Example

-

Null hypothesis (H₀): σ₁² = σ₂² (the two population

variances are equal).

- Alternative hypothesis (H₁): σ₁² ≠ σ₂² (the variances differ).

R

# Simulate systolic BP (mmHg) from two clinics:

set.seed(2025)

clinicA <- rnorm(30, mean = 120, sd = 8)

clinicB <- rnorm(25, mean = 118, sd = 12)

# Perform two-sample F-test for variances:

f_result <- var.test(clinicA, clinicB, alternative = "two.sided")

# Display results:

f_result

OUTPUT

F test to compare two variances

data: clinicA and clinicB

F = 0.41748, num df = 29, denom df = 24, p-value = 0.02606

alternative hypothesis: true ratio of variances is not equal to 1

95 percent confidence interval:

0.1882726 0.8992623

sample estimates:

ratio of variances

0.4174837 Interpretation

The F statistic is 0.417 with numerator df = 29 and denominator df = 24, and p-value = 0.0261. We

reject the null hypothesis. Thus, there is evidence that the variability in blood pressure differs between the two clinics.

EJ KORREKTURLÆST

-

Used for Testing whether the mean difference

between two related (paired) samples differs from zero.

- Real-world example: Comparing patients’ blood pressure before and after administering a new medication.

Assumptions * Paired observations are independent of

other pairs.

* Differences between pairs are approximately normally

distributed.

* The scale of measurement is continuous (interval or ratio).

Strengths * Controls for between‐subject variability

by using each subject as their own control.

* More powerful than unpaired tests when pairs are truly

dependent.

* Easy to implement and interpret.

Weaknesses * Sensitive to outliers in the difference

scores.

* Requires that differences be approximately normal, especially for

small samples.

* Not appropriate if pairing is not justified or if missing data break

pairs.

Example

-

Null hypothesis (H₀): The mean difference Δ = 0 (no

change in blood pressure).

- Alternative hypothesis (H₁): Δ ≠ 0 (blood pressure changes after medication).

R

# Simulated systolic blood pressure (mmHg) for 15 patients before and after treatment:

before <- c(142, 138, 150, 145, 133, 140, 147, 139, 141, 136, 144, 137, 148, 142, 139)

after <- c(135, 132, 144, 138, 128, 135, 142, 133, 136, 130, 139, 132, 143, 137, 133)

# Perform paired t-test:

test_result <- t.test(before, after, paired = TRUE)

# Display results:

test_result

OUTPUT

Paired t-test

data: before and after

t = 29.437, df = 14, p-value = 5.418e-14

alternative hypothesis: true mean difference is not equal to 0

95 percent confidence interval:

5.19198 6.00802

sample estimates:

mean difference

5.6 Interpretation:

The mean difference (before – after) is 5.6 mmHg. The t‐statistic is

29.44 with 14 degrees of freedom and a two‐sided p‐value

ofr signif(test_result$p.value, 3). We reject the null

hypothesis. Thus, there is evidence that the medication significantly

changed blood pressure.

EJ KORREKTURLÆST

- Used for Testing whether the means of two independent samples differ, assuming equal variances.

- Real-world example: Comparing average systolic blood pressure between male and female patients when variability is similar.

Assumptions * Observations in each group are

independent.

* Both populations are normally distributed (especially important for

small samples).

* The two populations have equal variances (homoscedasticity).

Strengths * More powerful than Welch’s t-test when

variances truly are equal.

* Simple computation and interpretation via pooled variance.

* Widely implemented and familiar to practitioners.

Weaknesses * Sensitive to violations of normality in

small samples.

* Incorrect if variances differ substantially—can inflate Type I

error.

* Assumes homogeneity of variance, which may not hold in practice.

Example

-

Null hypothesis (H₀): μ₁ = μ₂ (the two population

means are equal).

- Alternative hypothesis (H₁): μ₁ ≠ μ₂ (the means differ).

R

set.seed(2025)

# Simulate systolic BP (mmHg):

groupA <- rnorm(25, mean = 122, sd = 10) # e.g., males

groupB <- rnorm(25, mean = 118, sd = 10) # e.g., females

# Perform two-sample t-test with equal variances:

test_result <- t.test(groupA, groupB, var.equal = TRUE)

# Display results:

test_result

OUTPUT

Two Sample t-test

data: groupA and groupB

t = 2.6245, df = 48, p-value = 0.0116

alternative hypothesis: true difference in means is not equal to 0

95 percent confidence interval:

1.733091 13.086377

sample estimates:

mean of x mean of y

125.2119 117.8021 Interpretation:

The pooled estimate of the difference in means is 7.41 mmHg. The t-statistic is 2.62 with df = 48 and p-value = 0.0116. We reject the null hypothesis. Thus, there is evidence that the average systolic blood pressure differs between the two groups.

EJ KORREKTURLÆST

Used for Testing whether the means of two

independent samples differ when variances are unequal.

* Real-world example: Comparing average recovery times

for two different therapies when one therapy shows more variable

outcomes.

Assumptions * Observations in each group are

independent.

* Each population is approximately normally distributed (especially

important for small samples).

* Does not assume equal variances across groups.

Strengths * Controls Type I error when variances

differ.

* More reliable than the pooled‐variance t‐test under

heteroskedasticity.

* Simple to implement via t.test(..., var.equal = FALSE) in

R.

Weaknesses * Slight loss of power compared to

equal-variance t‐test when variances truly are equal.

* Sensitive to departures from normality in small samples.

* Degrees of freedom are approximated (Welch–Satterthwaite), which can

reduce interpretability.

Example

-

Null hypothesis (H₀): μ₁ = μ₂ (the two population

means are equal).

- Alternative hypothesis (H₁): μ₁ ≠ μ₂ (the means differ).

R

set.seed(2025)

# Simulate recovery times (days) for two therapies:

therapyA <- rnorm(20, mean = 10, sd = 2) # Therapy A

therapyB <- rnorm(25, mean = 12, sd = 4) # Therapy B (more variable)

# Perform two-sample t-test with unequal variances:

test_result <- t.test(therapyA, therapyB, var.equal = FALSE)

# Display results:

test_result

OUTPUT

Welch Two Sample t-test

data: therapyA and therapyB

t = -1.7792, df = 32.794, p-value = 0.08449

alternative hypothesis: true difference in means is not equal to 0

95 percent confidence interval:

-3.8148079 0.2558875

sample estimates:

mean of x mean of y

10.41320 12.19266 Interpretation:

The estimated difference in means is -1.78 days. The Welch

t‐statistic isr round(test_result$statistic, 2) with df ≈

32.8 and two‐sided p‐value

=r signif(test_result$p.value, 3). We fail to reject the

null hypothesis. Thus, there is no evidence of a difference in average

recovery times..

EJ KORREKTURLÆST

-

Used for Comparing the central tendencies of two

independent samples when the data are ordinal or not normally

distributed.

- Real-world example: Testing whether pain scores (0–10) differ between patients receiving Drug A versus Drug B when scores are skewed.

Assumptions * Observations are independent both

within and between groups.

* The response variable is at least ordinal.

* The two distributions have the same shape (so that differences reflect

location shifts).

Strengths * Nonparametric: does not require

normality or equal variances.

* Robust to outliers and skewed data.

* Simple rank-based calculation.

Weaknesses * Less powerful than t-test when data are

truly normal.

* If distributions differ in shape as well as location, interpretation

becomes ambiguous.

* Only tests for location shift, not differences in dispersion.

Example

-

Null hypothesis (H₀): The distributions of pain

scores are identical for Drug A and Drug B.

- Alternative hypothesis (H₁): The distributions differ in location (median pain differs between drugs).

R

# Simulate pain scores (0–10) for two independent groups:

set.seed(2025)

drugA <- c(2,3,4,5,4,3,2,6,5,4, # skewed lower

3,4,5,4,3,2,3,4,5,3)

drugB <- c(4,5,6,7,6,5,7,8,6,7, # skewed higher

6,7,5,6,7,6,8,7,6,7)

# Perform Mann–Whitney U test (Wilcoxon rank-sum):

mw_result <- wilcox.test(drugA, drugB, alternative = "two.sided", exact = FALSE)

# Display results:

mw_result

OUTPUT

Wilcoxon rank sum test with continuity correction

data: drugA and drugB

W = 20.5, p-value = 9.123e-07

alternative hypothesis: true location shift is not equal to 0Interpretation:

The Wilcoxon rank-sum statistic W = 20.5 with p-value = 9.12^{-7}. We reject the null hypothesis. Thus, there is evidence that median pain scores differ between Drug A and Drug B.

EJ KORREKTURLÆST

-

Used for Testing whether the median difference

between paired observations is zero.

- Real-world example: Comparing patients’ pain scores before and after a new analgesic treatment when differences may not be normally distributed.

Assumptions * Observations are paired and the pairs

are independent.

* Differences are at least ordinal and symmetrically distributed around

the median.

* No large number of exact zero differences (ties).

Strengths * Nonparametric: does not require

normality of differences.

* Controls for within‐subject variability by using paired design.

* Robust to outliers in the paired differences.

Weaknesses * Less powerful than the paired t-test

when differences are truly normal.

* Requires symmetry of the distribution of differences.

* Cannot easily handle many tied differences.

Example

-

Null hypothesis (H₀): The median difference in pain

score (before – after) = 0 (no change).

- Alternative hypothesis (H₁): The median difference ≠ 0 (pain changes after treatment).

R

# Simulated pain scores (0–10) for 12 patients:

before <- c(6, 7, 5, 8, 6, 7, 9, 5, 6, 8, 7, 6)

after <- c(4, 6, 5, 7, 5, 6, 8, 4, 5, 7, 6, 5)

# Perform Wilcoxon signed-rank test:

wsr_result <- wilcox.test(before, after, paired = TRUE,

alternative = "two.sided", exact = FALSE)

# Display results:

wsr_result

OUTPUT

Wilcoxon signed rank test with continuity correction

data: before and after

V = 66, p-value = 0.001586

alternative hypothesis: true location shift is not equal to 0Interpretation:

The Wilcoxon signed‐rank test statistic V =66 with p-value

=r signif(wsr_result$p.value, 3). We reject the null

hypothesis. Thus, there is evidence that median pain scores change after

treatment..

EJ KORREKTURLÆST

-

Used for Testing whether two independent samples

come from the same continuous distribution.

- Real-world example: Comparing the distribution of recovery times for patients receiving Drug A versus Drug B.

Assumptions * Observations in each sample are

independent.

* Data are continuous with no ties.

* The two samples are drawn from fully specified continuous

distributions (no parameters estimated from the same data).

Strengths * Nonparametric: makes no assumption about

the shape of the distribution.

* Sensitive to differences in location, scale, or overall shape.

* Exact distribution under the null when samples are not too large.

Weaknesses * Less powerful than parametric

alternatives if the true form is known (e.g., t-test for normal

data).

* Invalid p-values if there are ties or discrete data.

* Does not indicate how distributions differ—only that they do.

Example

-

Null hypothesis (H₀): The two samples come from the

same distribution.

- Alternative hypothesis (H₁): The two samples come from different distributions.

R

# Simulate recovery times (days) for two therapies:

set.seed(2025)

therapyA <- rnorm(30, mean = 10, sd = 2) # Therapy A

therapyB <- rnorm(30, mean = 12, sd = 3) # Therapy B

# Perform two-sample Kolmogorov–Smirnov test:

ks_result <- ks.test(therapyA, therapyB, alternative = "two.sided", exact = FALSE)

# Display results:

ks_result

OUTPUT

Asymptotic two-sample Kolmogorov-Smirnov test

data: therapyA and therapyB

D = 0.43333, p-value = 0.007153

alternative hypothesis: two-sidedInterpretation:

The KS statistic D = 0.433 with p-value = 0.00715. We reject the null hypothesis. Thus, there is evidence that the distribution of recovery times differs between therapies.

EJ KORREKTURLÆST

-

Used for Testing whether multiple groups have equal

variances.

- Real-world example: Checking if the variability in patient blood pressure differs between three different clinics.

Assumptions

- Observations are independent.

- The underlying distributions within each group are approximately symmetric (Levene’s test is robust to non-normality but assumes no extreme skew).

Strengths * More robust to departures from normality

than Bartlett’s test.

* Applicable to two or more groups.

* Simple to implement and interpret.

Weaknesses * Less powerful than tests that assume

normality when data truly are normal.

* Can be sensitive to extreme outliers despite its robustness.

* Does not indicate which groups differ in variance without follow-up

comparisons.

Example

-

Null hypothesis (H₀): All groups have equal

variances (σ₁² = σ₂² = … = σₖ²).

- Alternative hypothesis (H₁): At least one group’s variance differs.

R

# Simulate data for three groups (n = 10 each):

set.seed(123)

group <- factor(rep(c("ClinicA", "ClinicB", "ClinicC"), each = 10))

scores <- c(rnorm(10, mean = 120, sd = 5),

rnorm(10, mean = 120, sd = 8),

rnorm(10, mean = 120, sd = 5))

df <- data.frame(group, scores)

# Perform Levene’s test for homogeneity of variances:

library(car)

OUTPUT

Loading required package: carDataR

levene_result <- leveneTest(scores ~ group, data = df)

# Show results:

levene_result

OUTPUT

Levene's Test for Homogeneity of Variance (center = median)

Df F value Pr(>F)

group 2 0.872 0.4296

27 Interpretation:

Levene’s test yields an F-statistic of 0.87 with a p-value of 0.43. We fail to reject the null hypothesis. This means there is no evidence of differing variances across clinics.

EJ KORREKTURLÆST

-

Used for Testing whether multiple groups have equal

variances under the assumption of normality.

- Real-world example: Checking if the variability in laboratory test results differs across three different laboratories.

Assumptions * Observations within each group are

independent.

* Each group is drawn from a normally distributed population.

* Groups are independent of one another.

Strengths * More powerful than Levene’s test when

normality holds.

* Directly targets equality of variances under the normal model.

* Simple to compute in R via bartlett.test().

Weaknesses * Highly sensitive to departures from

normality—small deviations can inflate Type I error.

* Does not indicate which groups differ without further pairwise

testing.

* Not robust to outliers.

Example

-

Null hypothesis (H₀): All group variances are equal

(σ₁² = σ₂² = σ₃²).

- Alternative hypothesis (H₁): At least one group variance differs.

R

# Simulate data for three laboratories (n = 12 each):

set.seed(456)

lab <- factor(rep(c("LabA", "LabB", "LabC"), each = 12))

values <- c(rnorm(12, mean = 100, sd = 5),

rnorm(12, mean = 100, sd = 8),

rnorm(12, mean = 100, sd = 5))

df <- data.frame(lab, values)

# Perform Bartlett’s test for homogeneity of variances:

bartlett_result <- bartlett.test(values ~ lab, data = df)

# Display results:

bartlett_result

OUTPUT

Bartlett test of homogeneity of variances

data: values by lab

Bartlett's K-squared = 10.387, df = 2, p-value = 0.005552Interpretation:

Bartlett’s K-squared = 10.39 with df = 2 and p-value = 0.00555. We reject the null hypothesis. Thus, there is evidence that at least one laboratory’s variance differs from the others.

Variansanalyse (ANOVA/ANCOVA)

EJ KORREKTURLÆST

SAMMENHOLD MED undervisningsudgaven

-

Used for Testing whether the means of three or more

independent groups differ.

- Real-world example: Comparing average test scores among students taught by three different teaching methods.

Assumptions * Observations are independent.

* Each group’s residuals are approximately normally distributed.

* Homogeneity of variances across groups.

Strengths * Controls Type I error rate when

comparing multiple groups.

* Simple to compute and interpret via F-statistic.

* Foundation for many extensions (e.g., factorial ANOVA, mixed

models).

Weaknesses * Sensitive to heterogeneity of

variances, especially with unequal group sizes.

* Only tells you that at least one mean differs—does not indicate which

groups differ without post-hoc tests.

* Assumes normality; moderately robust for large samples, but small

samples can be problematic.

Example

-

Null hypothesis (H₀): μ₁ = μ₂ = μ₃ (all three group

means are equal).

- Alternative hypothesis (H₁): At least one group mean differs.

R

# Simulate exam scores for three teaching methods (n = 20 each):

set.seed(2025)

method <- factor(rep(c("Lecture", "Online", "Hybrid"), each = 20))

scores <- c(rnorm(20, mean = 75, sd = 8),

rnorm(20, mean = 80, sd = 8),

rnorm(20, mean = 78, sd = 8))

df <- data.frame(method, scores)

# Fit one-way ANOVA:

anova_fit <- aov(scores ~ method, data = df)

# Summarize ANOVA table:

anova_summary <- summary(anova_fit)

anova_summary

OUTPUT

Df Sum Sq Mean Sq F value Pr(>F)

method 2 146 72.79 1.184 0.314

Residuals 57 3505 61.48 Interpretation:

The ANOVA yields F = 1.18 with df₁

=r anova_summary[[1]]["method","Df"] and df₂

=r anova_summary[[1]]["Residuals","Df"], and p-value =

0.314. We fail to reject the null hypothesis. Thus, there is no evidence

of a difference in mean scores among methods.

EJ KORREKTURLÆST

-

Used for Comparing group means on a continuous

outcome while adjusting for one continuous covariate.

- Real-world example: Evaluating whether three different teaching methods lead to different final exam scores after accounting for students’ prior GPA.

Assumptions * Observations are independent.

* The relationship between the covariate and the outcome is linear and

the same across groups (homogeneity of regression slopes).

* Residuals are normally distributed with equal variances across

groups.

* Covariate is measured without error and is independent of group

assignment.

Strengths * Removes variability due to the

covariate, increasing power to detect group differences.

* Controls for confounding by the covariate.

* Simple extension of one-way ANOVA with interpretation familiar to

ANOVA users.

Weaknesses * Sensitive to violation of homogeneity

of regression slopes.

* Mis‐specification of the covariate‐outcome relationship biases

results.

* Requires accurate measurement of the covariate.

* Does not accommodate multiple covariates without extension to

factorial ANCOVA or regression.

Example

-

Null hypothesis (H₀): After adjusting for prior

GPA, the mean final exam scores are equal across the three teaching

methods (μ_Lecture = μ_Online = μ_Hybrid).

- Alternative hypothesis (H₁): At least one adjusted group mean differs.

R

set.seed(2025)

n <- 20

method <- factor(rep(c("Lecture","Online","Hybrid"), each = n))

prior_gpa <- rnorm(3*n, mean = 3.0, sd = 0.3)

# Simulate final exam scores with a covariate effect:

# true intercepts 75, 78, 80; slope = 5 points per GPA unit; noise sd = 5

final_score <- 75 +

ifelse(method=="Online", 3, 0) +

ifelse(method=="Hybrid", 5, 0) +

5 * prior_gpa +

rnorm(3*n, sd = 5)

df <- data.frame(method, prior_gpa, final_score)

# Fit one-way ANCOVA:

ancova_fit <- aov(final_score ~ prior_gpa + method, data = df)

ancova_summary <- summary(ancova_fit)

ancova_summary

OUTPUT

Df Sum Sq Mean Sq F value Pr(>F)

prior_gpa 1 166.5 166.5 5.967 0.01775 *

method 2 296.8 148.4 5.320 0.00767 **

Residuals 56 1562.4 27.9

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Interpretation:

After adjusting for prior GPA, the effect of teaching method yields F

= 5.32 (df₁ =r ancova_summary[[1]]["method","Df"], df₂

=r ancova_summary[[1]]["Residuals","Df"]) with p

=r signif(ancova_summary[[1]]["method","Pr(>F)"], 3). We

reject the null hypothesis. Thus, there is evidence that, controlling

for prior GPA, at least one teaching method leads to a different average

final score..

EJ KORREKTURLÆST

-

Used for Testing whether the means of three or more

independent groups differ when variances are unequal.

- Real-world example: Comparing average systolic blood pressure across three clinics known to have different measurement variability.

Assumptions * Observations are independent.

* Each group’s residuals are approximately normally distributed.

* Does not assume equal variances across groups.

Strengths * Controls Type I error under

heteroskedasticity better than ordinary ANOVA.

* Simple to implement via

oneway.test(..., var.equal = FALSE).

* More powerful than nonparametric alternatives when normality

holds.

Weaknesses * Sensitive to departures from normality,

especially with small sample sizes.

* Does not provide post-hoc comparisons by default; requires additional

tests.

* Still assumes independence and approximate normality within each

group.

Example

-

Null hypothesis (H₀): All group means are equal (μ₁

= μ₂ = μ₃).

- Alternative hypothesis (H₁): At least one group mean differs.

R

set.seed(2025)

# Simulate systolic BP (mmHg) for three clinics with unequal variances:

clinic <- factor(rep(c("A","B","C"), times = c(15, 20, 12)))

bp_values <- c(

rnorm(15, mean = 120, sd = 5),

rnorm(20, mean = 125, sd = 10),

rnorm(12, mean = 118, sd = 7)

)

df <- data.frame(clinic, bp_values)

# Perform Welch’s one-way ANOVA:

welch_result <- oneway.test(bp_values ~ clinic, data = df, var.equal = FALSE)

# Display results:

welch_result

OUTPUT

One-way analysis of means (not assuming equal variances)

data: bp_values and clinic

F = 2.7802, num df = 2.000, denom df = 23.528, p-value = 0.08244Interpretation:

The Welch statistic = 2.78 with df ≈ 2, 23.53, and p-value = 0.0824. We fail to reject the null hypothesis. Thus, there is no evidence of a difference in mean blood pressure among the clinics.

EJ KORREKTURLÆST

-

Used for Testing whether the means of three or more

related (within‐subject) conditions differ.

- Real-world example: Assessing whether students’ reaction times change across three levels of sleep deprivation (0 h, 12 h, 24 h) measured on the same individuals.

Assumptions * Observations (subjects) are

independent.

* The dependent variable is approximately normally distributed in each

condition.

* Sphericity: variances of the pairwise differences

between conditions are equal.

Strengths * Controls for between‐subject variability

by using each subject as their own control.

* More powerful than independent‐groups ANOVA when measures are

correlated.

* Can model complex within‐subject designs (e.g. time × treatment

interactions).

Weaknesses * Sensitive to violations of sphericity

(inflates Type I error).

* Missing data in any condition drops the entire subject (unless using

more advanced mixed‐model methods).

* Interpretation can be complex when there are many levels or

interactions.

Example

-

Null hypothesis (H₀): The mean reaction time is the

same across 0 h, 12 h, and 24 h sleep deprivation.

- Alternative hypothesis (H₁): At least one condition’s mean reaction time differs.

R

set.seed(2025)

n_subj <- 12

subject <- factor(rep(1:n_subj, each = 3))

condition <- factor(rep(c("0h","12h","24h"), times = n_subj))

# Simulate reaction times (ms):

rt <- c(rnorm(n_subj, mean = 300, sd = 20),

rnorm(n_subj, mean = 320, sd = 20),

rnorm(n_subj, mean = 340, sd = 20))

df <- data.frame(subject, condition, rt)

# Fit repeated-measures ANOVA:

rm_fit <- aov(rt ~ condition + Error(subject/condition), data = df)

rm_summary <- summary(rm_fit)

# Display results:

rm_summary

OUTPUT

Error: subject

Df Sum Sq Mean Sq F value Pr(>F)

Residuals 11 9892 899.3

Error: subject:condition

Df Sum Sq Mean Sq F value Pr(>F)

condition 2 1000 500 1.188 0.324

Residuals 22 9262 421 Interpretation:

The within‐subjects effect of sleep deprivation yields F = 1.19 with

df₁ = 2 and df₂ = r rm_summary[[2]][[1]]["Residuals","Df"],

p = 0.324. We fail to reject the null hypothesis. Thus, there is no

evidence that reaction times differ across sleep deprivation

conditions.

EJ KORREKTURLÆST

-

Used for Testing for differences on multiple

continuous dependent variables across one or more grouping factors

simultaneously.

- Real-world example: Evaluating whether three different diets lead to different patterns of weight loss and cholesterol reduction.

Assumptions * Multivariate normality of the

dependent variables within each group.

* Homogeneity of covariance matrices across groups.

* Observations are independent.

* Linear relationships among dependent variables.

Strengths * Controls family-wise Type I error by

testing all DVs together.

* Can detect patterns that univariate ANOVAs might miss.

* Provides multiple test statistics (Pillai, Wilks, Hotelling–Lawley,

Roy) for flexibility.

Weaknesses * Sensitive to violations of multivariate

normality and homogeneity of covariances.

* Requires larger sample sizes as the number of DVs increases.

* Interpretation can be complex; follow-up analyses often needed to

determine which DVs drive effects.

Example

-

Null hypothesis (H₀): The vector of means for

weight loss and cholesterol change is equal across the three diet

groups.

- Alternative hypothesis (H₁): At least one diet group differs on the combination of weight loss and cholesterol change.

R

set.seed(2025)

n_per_group <- 15

diet <- factor(rep(c("LowFat", "LowCarb", "Mediterranean"), each = n_per_group))

# Simulate outcomes:

weight_loss <- c(rnorm(n_per_group, mean = 5, sd = 1.5),

rnorm(n_per_group, mean = 8, sd = 1.5),

rnorm(n_per_group, mean = 7, sd = 1.5))

cholesterol <- c(rnorm(n_per_group, mean = 10, sd = 2),

rnorm(n_per_group, mean = 12, sd = 2),

rnorm(n_per_group, mean = 9, sd = 2))

df <- data.frame(diet, weight_loss, cholesterol)

# Fit MANOVA:

manova_fit <- manova(cbind(weight_loss, cholesterol) ~ diet, data = df)

# Summarize using Pillai’s trace:

manova_summary <- summary(manova_fit, test = "Pillai")

manova_summary

OUTPUT

Df Pillai approx F num Df den Df Pr(>F)

diet 2 0.58953 8.7773 4 84 5.708e-06 ***

Residuals 42

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Interpretation:

Pillai’s trace = 0.59, F = 8.78 with df = 42, and p-value = 5.71^{-6}. We reject the null hypothesis. This indicates that there is

a significant multivariate effect of diet on weight loss and cholesterol change.

Pillai?

Der er fire almindeligt brugte taststatistikker i MANOVA. Pillai, Wilks’ lambd, Hotelling-Lawleys trace og Roys largest root.

Pillai’s trace (også kaldet Pillai–Bartlett’s trace) er én af fire almindeligt brugte multivariate test-statistikker i MANOVA (ud over Wilks’ lambda, Hotelling–Lawley’s trace og Roy’s largest root). Kort om Pillai:

Definition: Summen af egenværdierne divideret med (1 + egenværdierne) for hver kanonisk variabel. Det giver en samlet målestok for, hvor stor en andel af den totale variation der forklares af gruppetilhørsforholdet på tværs af alle afhængige variable.

Fortolkning: Højere Pillai-værdi (op til 1) indikerer stærkere multivariat effekt.

Hvorfor vælge Pillai?

Robusthed: Pillai’s trace er den mest robuste over for overtrædelser af antagelserne om homogen kovarians og multivariat normalitet. Hvis dine data har ulige gruppe-størrelser eller let skæve fordelinger, er Pillai ofte det sikreste valg.

Type I-kontrol: Den holder typisk kontrol med falsk positive (Type I-fejl) bedre end de andre, når antagelser brydes.

Er det altid det bedste valg?

Ikke nødvendigvis. Hvis dine data strengt opfylder antagelserne (multivariat normalitet, homogen kovarians og rimeligt store, ensartede grupper), kan de andre statistikker være mere “kraftfulde” (dvs. give større chance for at opdage en ægte effekt).

Wilks’ lambda er mest brugt i traditionel litteratur og har ofte god power under idéelle forhold.

Hotelling–Lawley’s trace kan være særligt følsom, når få kanoniske dimensioner bærer meget af effekten.

Roy’s largest root er ekstremt kraftfuld, hvis kun én kanonisk variabel adskiller grupperne, men er også mest sårbar gruppe-størrelser.over for antagelsesovertrædelser.

Kort anbefaling:

Brug Pillai’s trace som standard, især hvis du er usikker på antagelsesopfyldelsen eller har små/ulige

Overvej Wilks’ lambda eller andre, hvis dine data opfylder alle antagelser solidt, og du ønsker maksimal statistisk power.

Tjek altid flere tests; hvis de konkluderer ens, styrker det din konklusion.

Wilks’ lambda

-

Definition: Ratio of the determinant of the

within‐groups SSCP (sum of squares and cross‐products) matrix to the

determinant of the total SSCP matrix:

\[ \Lambda = \frac{\det(W)}{\det(T)} = \prod_{i=1}^s \frac{1}{1 + \lambda_i} \]

hvor \(\lambda_i\) er de canoniske egenværdier. -

Fortolkning: Værdier nær 0 indikerer stor

multivariat effekt (mellem‐grupper‐variation >>

inden‐gruppe‐variation), værdier nær 1 indikerer lille eller ingen

effekt.

-

Styrker:

- Klassisk og mest udbredt i litteraturen.

- God power under ideal antagelsesopfyldelse (multivariat normalitet,

homogene kovarianser).

- Klassisk og mest udbredt i litteraturen.

-

Svagheder:

- Mindre robust ved skæve fordelinger eller ulige

gruppe‐størrelser.

- Kan undervurdere effektstørrelse, hvis én eller flere kanoniske

variabler bærer effekten ujævnt.

- Mindre robust ved skæve fordelinger eller ulige

gruppe‐størrelser.

-

Anbefaling:

- Brug Wilks’ lambda, når du er sikker på, at antagelserne er opfyldt, og du ønsker en velkendt statistisk test med god power under idealforhold.

Hotelling–Lawley’s trace

-

Definition: Summen af de canoniske

egenværdier:

\[ T = \sum_{i=1}^s \lambda_i \] -

Fortolkning: Højere værdi betyder større samlet

multivariat effekt.

-

Styrker:

- Sensitiv over for effekter fordelt over flere kanoniske

dimensioner.

- Kan være mere kraftfuld end Wilks’ lambda, hvis flere dimensioner

bidrager til forskellen.

- Sensitiv over for effekter fordelt over flere kanoniske

dimensioner.

-

Svagheder:

- Mindre robust over for antagelsesbrud end Pillai’s trace.

- Kan overvurdere effektstørrelse, hvis én dimension dominerer

kraftigt.

- Mindre robust over for antagelsesbrud end Pillai’s trace.

-

Anbefaling:

- Overvej Hotelling–Lawley’s trace, når du forventer, at effekten spreder sig over flere kanoniske variabler, og antagelserne er rimeligt dækket.

Roy’s largest root

-

Definition: Den største canoniske egenværdi

alene:

\[ \Theta = \max_i \lambda_i \] -

Fortolkning: Måler den stærkeste

enkeltdimensionseffekt.

-

Styrker:

- Højest power, når én kanonisk variabel står for størstedelen af

gruppedifferensen.

- Let at beregne og fortolke, hvis fokus er på “den stærkeste

effekt”.

- Højest power, når én kanonisk variabel står for størstedelen af

gruppedifferensen.

-

Svagheder:

- Meget følsom over for antagelsesbrud (normalitet, homogene

kovarianser).

- Ikke informativ, hvis flere dimensioner bidrager jævnt.

- Meget følsom over for antagelsesbrud (normalitet, homogene

kovarianser).

-

Anbefaling:

- Brug Roy’s largest root, når du har en stærk a priori mistanke om én dominerende kanonisk dimension og er komfortabel med forudsætningerne.

Tips: Sammenlign altid flere test‐statistikker – hvis de peger i samme retning, styrker det din konklusion. Pillai’s trace er generelt mest robust, Wilks’ lambda mest almindelig, Hotelling–Lawley god til flere dimensioner, og Roy’s largest root bedst, når én dimension dominerer.

EJ KORREKTURLÆST

-

Used for Testing for differences in central

tendency across three or more related (paired) groups when assumptions

of repeated‐measures ANOVA are violated.

- Real-world example: Comparing median pain scores at baseline, 1 hour, and 24 hours after surgery in the same patients.

Assumptions * Observations are paired and the sets

of scores for each condition are related (e.g., repeated measures on the

same subjects).

* Data are at least ordinal.

* The distribution of differences across pairs need not be normal.

Strengths * Nonparametric: does not require

normality or sphericity.

* Controls for between‐subject variability by using each subject as

their own block.

* Robust to outliers and skewed data.

Weaknesses * Less powerful than repeated‐measures

ANOVA when normality and sphericity hold.

* Only indicates that at least one condition differs—post‐hoc tests are

needed to locate differences.

* Assumes similar shaped distributions across conditions.

Example

-

Null hypothesis (H₀): The distributions of scores

are the same across all conditions (no median differences).

- Alternative hypothesis (H₁): At least one condition’s distribution (median) differs.

R

# Simulate pain scores (0–10) for 12 patients at 3 time points:

set.seed(2025)

patient <- factor(rep(1:12, each = 3))

timepoint <- factor(rep(c("Baseline","1hr","24hr"), times = 12))

scores <- c(

rpois(12, lambda = 5), # Baseline

rpois(12, lambda = 3), # 1 hour post-op

rpois(12, lambda = 4) # 24 hours post-op

)

df <- data.frame(patient, timepoint, scores)

# Perform Friedman test:

friedman_result <- friedman.test(scores ~ timepoint | patient, data = df)

# Display results:

friedman_result

OUTPUT

Friedman rank sum test

data: scores and timepoint and patient

Friedman chi-squared = 1.6364, df = 2, p-value = 0.4412Interpretation:

The Friedman chi-squared = 1.64 with df = 2 and p-value = 0.441. We fail to reject the null hypothesis. Thus, there is no evidence that pain scores differ across time points.

EJ KORREKTURLÆST

-

Used for Performing pairwise comparisons of group

means after a significant one‐way ANOVA to identify which *roups

differ.

- Real-world example: Determining which teaching methods (Lecture, Online, Hybrid) differ in average exam scores after finding an overall effect.

Assumptions * A significant one‐way ANOVA has been

obtained.

* Observations are independent.

* Residuals from the ANOVA are approximately normally distributed.

* Homogeneity of variances across groups (though Tukey’s HSD is fairly

robust).

Strengths * Controls the family‐wise error rate

across all pairwise tests.

* Provides confidence intervals for each mean difference.

* Widely available and simple to interpret.

Weaknesses * Requires balanced or nearly balanced

designs for optimal power.

* Less powerful than some alternatives if variances are highly

unequal.

* Only applies after a significant omnibus ANOVA.

Example

-

Null hypothesis (H₀): All pairwise mean differences

between teaching methods are zero (e.g., μ_Lecture – μ_Online = 0,

etc.).

- Alternative hypothesis (H₁): At least one pairwise mean difference ≠ 0.

R

set.seed(2025)

# Simulate exam scores for three teaching methods (n = 20 each):

method <- factor(rep(c("Lecture", "Online", "Hybrid"), each = 20))

scores <- c(rnorm(20, mean = 75, sd = 8),

rnorm(20, mean = 80, sd = 8),

rnorm(20, mean = 78, sd = 8))

df <- data.frame(method, scores)

# Fit one-way ANOVA:

anova_fit <- aov(scores ~ method, data = df)

# Perform Tukey HSD post-hoc:

tukey_result <- TukeyHSD(anova_fit, "method")

# Display results:

tukey_result

OUTPUT

Tukey multiple comparisons of means

95% family-wise confidence level

Fit: aov(formula = scores ~ method, data = df)

$method

diff lwr upr p adj

Lecture-Hybrid -2.542511 -8.509478 3.424457 0.5640694

Online-Hybrid 1.192499 -4.774468 7.159467 0.8805866

Online-Lecture 3.735010 -2.231958 9.701978 0.2956108Interpretation:

Each row of the output gives the estimated difference in means, a 95% confidence interval, and an adjusted p‐value. For example, if the Lecture–Online comparison shows a mean difference of –5.0 (95% CI: –8.0 to –2.0, p adj = 0.002), we conclude that the Online method yields significantly higher scores than Lecture. Comparisons with p adj < 0.05 indicate significant mean differences between those teaching methods.

EJ KORREKTURLÆST

-

Used for Comparing multiple treatment groups to a

single control while controlling the family‐wise error rate.

- Real-world example: Testing whether two new fertilizers (Fertilizer A, Fertilizer B) improve crop yield compared to the standard fertilizer (Control).

Assumptions * Observations are independent.

* Residuals from the ANOVA are approximately normally distributed.

* Homogeneity of variances across groups.

* A significant overall ANOVA (omnibus F-test) has been observed or

intended.

Strengths * Controls the family-wise error rate when

making multiple comparisons to a control.

* More powerful than Tukey HSD when only control comparisons are of

interest.

* Provides simultaneous confidence intervals and adjusted p-values.

Weaknesses * Only compares each group to the

control; does not test all pairwise contrasts.

* Sensitive to violations of normality and homogeneity of

variances.

* Requires a pre-specified control group.

Example

-

Null hypothesis (H₀): Each treatment mean equals

the control mean (e.g., μ_A = μ_Control, μ_B = μ_Control).

- Alternative hypothesis (H₁): At least one treatment mean differs from the control mean (e.g., μ_A ≠ μ_Control, μ_B ≠ μ_Control).

R

# Simulate crop yields (kg/plot) for Control and two new fertilizers:

set.seed(2025)

treatment <- factor(rep(c("Control", "FertilizerA", "FertilizerB"), each = 20))

yield <- c(

rnorm(20, mean = 50, sd = 5), # Control

rnorm(20, mean = 55, sd = 5), # Fertilizer A

rnorm(20, mean = 53, sd = 5) # Fertilizer B

)

df <- data.frame(treatment, yield)

# Fit one-way ANOVA:

fit_anova <- aov(yield ~ treatment, data = df)

# Perform Dunnett's test (each treatment vs. Control):

library(multcomp)

OUTPUT

Loading required package: mvtnormOUTPUT

Loading required package: survivalOUTPUT

Loading required package: TH.dataOUTPUT

Loading required package: MASSOUTPUT

Attaching package: 'TH.data'OUTPUT

The following object is masked from 'package:MASS':

geyserOUTPUT

Attaching package: 'multcomp'OUTPUT

The following object is masked _by_ '.GlobalEnv':

cholesterolR

dunnett_result <- glht(fit_anova, linfct = mcp(treatment = "Dunnett"))

# Summary with adjusted p-values:

summary(dunnett_result)

OUTPUT

Simultaneous Tests for General Linear Hypotheses

Multiple Comparisons of Means: Dunnett Contrasts

Fit: aov(formula = yield ~ treatment, data = df)

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

FertilizerA - Control == 0 4.209 1.550 2.716 0.0165 *

FertilizerB - Control == 0 2.714 1.550 1.751 0.1503

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)R

confint(dunnett_result)

OUTPUT

Simultaneous Confidence Intervals

Multiple Comparisons of Means: Dunnett Contrasts

Fit: aov(formula = yield ~ treatment, data = df)

Quantile = 2.2682

95% family-wise confidence level

Linear Hypotheses:

Estimate lwr upr

FertilizerA - Control == 0 4.2094 0.6942 7.7246

FertilizerB - Control == 0 2.7141 -0.8011 6.2292Interpretation: The Dunnett contrasts compare each fertilizer to Control. For example, if the contrast FertilizerA–Control shows an estimate of r round(coef(dunnett_result)[1], 2) kg with a 95% simultaneous CI [4.21, 0.69] and adjusted p-value = 0.0165, we reject the null for Fertilizer A vs. Control—i.e., Fertilizer A yields significantly different crop output. Similarly, for FertilizerB vs. Control (contrast index 2), the estimate is 2.71 kg (CI [2.71, -0.8], p-value = 0.15, so we fail to reject the null for Fertilizer B vs. Control.

EJ KORREKTURLÆST

-

Used for Adjusting p-values when performing

multiple hypothesis tests to control the family-wise error rate.

- Real-world example: Comparing mean blood pressure between four different diets with all six pairwise t-tests, using Bonferroni to adjust for multiple comparisons.

Assumptions * The individual tests (e.g., pairwise

t-tests) satisfy their own assumptions (independence, normality, equal

variances if applicable).

* Tests are independent or positively dependent (Bonferroni remains

valid under any dependency but can be conservative).

Strengths * Simple to calculate: multiply each

p-value by the number of comparisons.

* Guarantees control of the family-wise error rate at the chosen α

level.

* Applicable to any set of p-values regardless of test type.

Weaknesses * Very conservative when many comparisons

are made, reducing power.

* Can inflate Type II error (miss true effects), especially with large

numbers of tests.

* Does not take into account the magnitude of dependency among

tests.

Example

-

Null hypotheses (H₀): For each pair of diets, the

mean blood pressure is equal (e.g., μ_A = μ_B, μ_A = μ_C, …).

- Alternative hypotheses (H₁): For at least one pair, the means differ.

R

set.seed(2025)

# Simulate blood pressure for four diet groups (n = 15 each):

diet <- factor(rep(c("A","B","C","D"), each = 15))

bp <- c(

rnorm(15, mean = 120, sd = 8),

rnorm(15, mean = 125, sd = 8),

rnorm(15, mean = 130, sd = 8),

rnorm(15, mean = 135, sd = 8)

)

# Perform all pairwise t-tests with Bonferroni adjustment:

pairwise_result <- pairwise.t.test(bp, diet, p.adjust.method = "bonferroni")

# Display results:

pairwise_result

OUTPUT

Pairwise comparisons using t tests with pooled SD

data: bp and diet

A B C

B 0.4313 - -

C 0.0432 1.0000 -

D 1.7e-05 0.0083 0.1154

P value adjustment method: bonferroni Interpretation:

The output shows adjusted p-values for each pair of diets. For example, if the A vs D comparison has p adj = 0.004 (< 0.05), we reject H₀ for that pair and conclude a significant mean difference. Comparisons with p adj ≥ 0.05 fail to reject H₀, indicating no evidence of difference after correction.

Ikke-parametriske k-prøve-tests

EJ KORREKTURLÆST

-

Used for Comparing the central tendency of three or

more independent groups when the outcome is ordinal or not normally

distributed.

- Real-world example: Testing whether median pain scores differ across four treatment groups in a clinical trial when scores are skewed.

Assumptions * Observations are independent both

within and between groups.

* The response variable is at least ordinal.

* The distributions of the groups have the same shape (so differences

reflect shifts in location).

Strengths * Nonparametric: does not require

normality or equal variances.

* Handles ordinal data and skewed continuous data.

* Controls Type I error when comparing multiple groups without assuming

normality.

Weaknesses * Less powerful than one-way ANOVA when

normality holds.

* If group distributions differ in shape, interpretation of a location

shift is ambiguous.

* Only indicates that at least one group differs—post-hoc tests needed

to identify which.

Example

-

Null hypothesis (H₀): The distributions (medians)

of the four treatment groups are equal.

- Alternative hypothesis (H₁): At least one group’s median pain score differs.

R

# Simulate pain scores (0–10) for four treatment groups (n = 15 each):

set.seed(2025)

group <- factor(rep(c("Placebo","DrugA","DrugB","DrugC"), each = 15))

scores <- c(

rpois(15, lambda = 5), # Placebo

rpois(15, lambda = 4), # Drug A

rpois(15, lambda = 3), # Drug B

rpois(15, lambda = 2) # Drug C

)

df <- data.frame(group, scores)

# Perform Kruskal–Wallis rank‐sum test:

kw_result <- kruskal.test(scores ~ group, data = df)

# Display results:

kw_result

OUTPUT

Kruskal-Wallis rank sum test

data: scores by group

Kruskal-Wallis chi-squared = 21.621, df = 3, p-value = 7.82e-05Interpretation:

The Kruskal–Wallis chi-squared = 21.62 with df = 3 and p-value = 7.82^{-5}. We reject the null hypothesis. Thus, there is evidence that at least one treatment group’s median pain score differs.

EJ KORREKTURLÆST HAV SÆRLIGT FOKUS PÅ OM DER ER FORSKEL PÅ DENNE OG SPEARMAN-RANK CORRELATION SENERE

-

Used for Assessing the strength and direction of a

monotonic association between two variables using their ranks.

- Real-world example: Evaluating whether patients’ pain rankings correlate with their anxiety rankings.

Assumptions * Observations are independent.

* Variables are at least ordinal.

* The relationship is monotonic (but not necessarily linear).

Strengths * Nonparametric: does not require

normality.

* Robust to outliers in the original measurements.

* Captures any monotonic relationship, not limited to linear.

Weaknesses * Less powerful than Pearson’s

correlation when data are bivariate normal and relationship is

linear.

* Does not distinguish between different monotonic shapes (e.g., concave

vs. convex).

* Ties reduce the effective sample size and complicate exact p-value

calculation.

Example

-

Null hypothesis (H₀): There is no monotonic

association between X and Y (ρ = 0).

- Alternative hypothesis (H₁): There is a nonzero monotonic association (ρ ≠ 0).

R

# Simulate two variables with a monotonic relationship:

set.seed(2025)

x <- sample(1:100, 30)

y <- x + rnorm(30, sd = 10) # roughly increasing with x

# Perform Spearman rank correlation test:

spearman_result <- cor.test(x, y, method = "spearman", exact = FALSE)

# Display results:

spearman_result

OUTPUT

Spearman's rank correlation rho

data: x and y

S = 300, p-value = 5.648e-14

alternative hypothesis: true rho is not equal to 0

sample estimates:

rho

0.9332592 Interpretation:

Spearman’s ρ = 0.933 with p-value = 5.65^{-14}. We reject the null hypothesis. Thus, there is evidence of a monotonic association between X and Y.

EJ KORREKTURLÆST

-

Used for Testing whether a sample comes from a

specified continuous distribution (most commonly normal).

- Real-world example: Checking if daily measurement errors from a laboratory instrument follow a normal distribution.

Assumptions * Observations are independent.

* Data are continuous (no excessive ties).

* For goodness‐of‐fit to a non‐normal distribution (e.g. exponential),

the distribution’s parameters must be fully specified a priori.

Strengths * More sensitive than the Shapiro–Wilk

test to departures in the tails of the distribution.

* Applicable to a wide range of target distributions (with the

appropriate implementation).

* Provides both a test statistic (A²) and p-value.

Weaknesses * Very sensitive in large samples—small

deviations can yield significant results.

* If parameters are estimated from the data (e.g. normal mean/SD),

p-values may be conservative.

* Does not indicate the form of the departure (e.g. skew

vs. kurtosis).

Example

-

Null hypothesis (H₀): The sample is drawn from a

Normal distribution.

- Alternative hypothesis (H₁): The sample is not drawn from a Normal distribution.

R

# Install and load nortest if necessary:

# install.packages("nortest")

library(nortest)

# Simulate a sample of 40 observations:

set.seed(123)

sample_data <- rnorm(40, mean = 100, sd = 15)

# Perform Anderson–Darling test for normality:

ad_result <- ad.test(sample_data)

# Display results:

ad_result

OUTPUT

Anderson-Darling normality test

data: sample_data

A = 0.13614, p-value = 0.9754Interpretation:

The Anderson–Darling statistic A² = 0.136 with p-value = 0.975. We fail to reject the null hypothesis. Thus, there is no evidence to conclude a departure from normality.

Regression og korrelation

EJ KORREKTURLÆST

- Used for

- Modeling and quantifying the linear relationship between a

continuous predictor and a continuous outcome.

- Real-world example: Predicting house sale price based on living area in square feet.

Assumptions * A linear relationship between

predictor and outcome.

* Residuals are independent and normally distributed with mean

zero.

* Homoscedasticity: constant variance of residuals across values of the

predictor.

* No influential outliers or high-leverage points.

Strengths * Provides an interpretable estimate of

the change in outcome per unit change in predictor.

* Inference on slope and intercept via hypothesis tests and confidence

intervals.

* Basis for more complex regression models and diagnostics.

Weaknesses * Only captures linear patterns; will

miss nonlinear relationships.

* Sensitive to outliers, which can distort estimates and

inference.

* Extrapolation beyond observed predictor range is unreliable.

Example

-

Null hypothesis (H₀): The slope β₁ = 0 (no linear

association between x and y).

- Alternative hypothesis (H₁): β₁ ≠ 0 (a linear association exists).

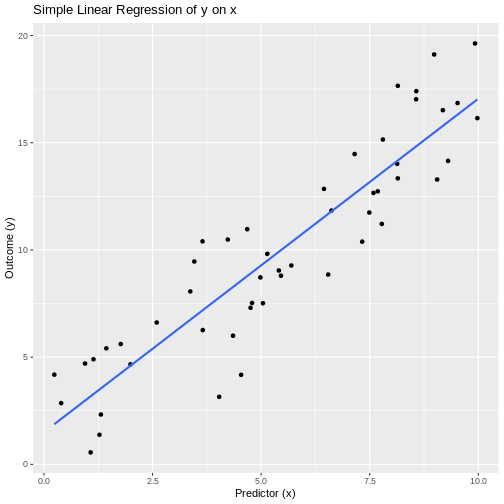

R

set.seed(2025)

# Simulate data:

n <- 50

x <- runif(n, min = 0, max = 10)

y <- 2 + 1.5 * x + rnorm(n, sd = 2)

df <- data.frame(x, y)

# Fit simple linear regression:

model <- lm(y ~ x, data = df)

# Show summary of model:

summary(model)

OUTPUT

Call:

lm(formula = y ~ x, data = df)

Residuals:

Min 1Q Median 3Q Max

-4.6258 -1.4244 -0.0462 1.6635 3.6410

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 1.5009 0.6267 2.395 0.0206 *

x 1.5554 0.1023 15.210 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 2.057 on 48 degrees of freedom

Multiple R-squared: 0.8282, Adjusted R-squared: 0.8246

F-statistic: 231.3 on 1 and 48 DF, p-value: < 2.2e-16R

# Plot data with regression line:

library(ggplot2)

ggplot(df, aes(x = x, y = y)) +

geom_point() +

geom_smooth(method = "lm", se = FALSE) +

labs(title = "Simple Linear Regression of y on x",

x = "Predictor (x)",

y = "Outcome (y)")

OUTPUT

`geom_smooth()` using formula = 'y ~ x'

Interpretation: The estimated slope is 1.555, with a p-value of 5.51^{-20}. We reject the null hypothesis, indicating that there is evidence of a significant linear association between x and y.

EJ KORREKTURLÆST

-

Used for Modeling the relationship between one

continuous outcome and two or more predictors (continuous or

categorical).

- Real-world example: Predicting house sale price based on living area, number of bedrooms, and neighborhood quality.

Assumptions * Correct specification: linear

relationship between each predictor and the outcome (additivity).

* Residuals are independent and normally distributed with mean

zero.

* Homoscedasticity: constant variance of residuals for all predictor

values.

* No perfect multicollinearity among predictors.

* No influential outliers unduly affecting the model.

Strengths * Can adjust for multiple confounders or

risk factors simultaneously.

* Provides estimates and inference (CI, p-values) for each predictor’s

unique association with the outcome.

* Basis for variable selection, prediction, and causal modeling in

observational data.

Weaknesses * Sensitive to multicollinearity, which

inflates variances of coefficient estimates.

* Assumes a linear, additive form; interactions or nonlinearity require

extension.

* Outliers and high-leverage points can distort estimates and

inference.

* Interpretation can be complex when including many predictors or

interactions.

Example

-

Null hypothesis (H₀): All regression coefficients

for predictors (β₁, β₂, β₃) are zero (no association).

- Alternative hypothesis (H₁): At least one βᵢ ≠ 0.

R

set.seed(2025)

n <- 100

# Simulate predictors:

living_area <- runif(n, 800, 3500) # in square feet

bedrooms <- sample(2:6, n, replace = TRUE)

neighborhood <- factor(sample(c("Low","Medium","High"), n, replace = TRUE))

# Simulate price with true model:

price <- 50000 +

30 * living_area +

10000 * bedrooms +

ifelse(neighborhood=="Medium", 20000,

ifelse(neighborhood=="High", 50000, 0)) +

rnorm(n, sd = 30000)

df <- data.frame(price, living_area, bedrooms, neighborhood)

# Fit multiple linear regression:

model_mlr <- lm(price ~ living_area + bedrooms + neighborhood, data = df)

# Show model summary:

summary(model_mlr)

OUTPUT

Call:

lm(formula = price ~ living_area + bedrooms + neighborhood, data = df)

Residuals:

Min 1Q Median 3Q Max

-76016 -17437 1258 20097 72437

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 119719.729 12445.109 9.620 1.07e-15 ***

living_area 26.498 3.685 7.190 1.47e-10 ***

bedrooms 8292.136 2147.637 3.861 0.000206 ***

neighborhoodLow -59241.209 7084.797 -8.362 5.18e-13 ***

neighborhoodMedium -34072.271 6927.394 -4.918 3.65e-06 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 28490 on 95 degrees of freedom

Multiple R-squared: 0.5891, Adjusted R-squared: 0.5718

F-statistic: 34.05 on 4 and 95 DF, p-value: < 2.2e-16Interpretation:

The overall F-test (in 34.05 on df₁ = 4, df₂ = 95 has p-value = 1.3^{-17}, so reject the null hypothesis.

Individual coefficients: for example, living_area’s estimate is 26.5 (p = 1.47^{-10}), indicating a significant positive association: each additional square foot increases price by about $30 on average.

Similar interpretation applies to bedrooms and neighborhood indicators.

EJ KORREKTURLÆST

-

Used for Assessing the strength and direction of a

linear relationship between two continuous variables.

- Real-world example: Examining whether students’ hours of study correlate with their exam scores.

Assumptions * Observations are independent

pairs.

* Both variables are approximately normally distributed (bivariate

normality).

* Relationship is linear.

* No extreme outliers.

Strengths * Provides both a correlation coefficient

(r) and hypothesis test (t‐statistic, p‐value).

* Confidence interval for the true correlation can be obtained.

* Well understood and widely used.

Weaknesses * Sensitive to outliers, which can

distort r.

* Only measures linear association—will miss non‐linear

relationships.

* Reliant on normality; departures can affect Type I/II error rates.

Example

-

Null hypothesis (H₀): The true Pearson correlation

ρ = 0 (no linear association).

- Alternative hypothesis (H₁): ρ ≠ 0 (a linear association exists).

R

set.seed(2025)

# Simulate data:

n <- 30

hours_studied <- runif(n, min = 0, max = 20)

# Make exam_scores roughly increase with hours_studied + noise:

exam_scores <- 50 + 2.5 * hours_studied + rnorm(n, sd = 5)

# Perform Pearson correlation test:

pearson_result <- cor.test(hours_studied, exam_scores, method = "pearson")

# Display results:

pearson_result

OUTPUT

Pearson's product-moment correlation

data: hours_studied and exam_scores

t = 14.086, df = 28, p-value = 3.108e-14

alternative hypothesis: true correlation is not equal to 0

95 percent confidence interval:

0.8689058 0.9694448

sample estimates:

cor

0.9361291 Interpretation: The sample Pearson correlation is 0.936, with t = 14.09 on df = 28 and p-value = 3.11^{-14}. We reject the null hypothesis, indicating that there is evidence of a significant linear association between hours studied and exam scores.

EJ KORREKTURLÆST Used for Assessing the strength and

direction of a monotonic association between two variables using their

ranks.

* Real-world example: Evaluating whether patients’ pain

rankings correlate with their anxiety rankings.

Assumptions * Observations are independent

pairs.

* Variables are at least ordinal.

* The relationship is monotonic (consistently increasing or

decreasing).

Strengths * Nonparametric: does not require

normality of the underlying data.

* Robust to outliers in the original measurements.

* Captures any monotonic relationship, not limited to linear.

Weaknesses * Less powerful than Pearson’s

correlation when the true relationship is linear and data are bivariate

normal.

* Does not distinguish between different monotonic shapes (e.g. concave

vs. convex).

* Tied ranks reduce effective sample size and can complicate exact

p-value calculation.

Example

-

Null hypothesis (H₀): The true Spearman rank

correlation ρ = 0 (no monotonic association).

- Alternative hypothesis (H₁): ρ ≠ 0 (a monotonic association exists).

R

# Simulate two variables with a monotonic relationship:

set.seed(2025)

x <- sample(1:100, 30) # e.g., anxiety scores ranked

y <- x + rnorm(30, sd = 15) # roughly increasing with x, plus noise

# Perform Spearman rank correlation test:

spearman_result <- cor.test(x, y, method = "spearman", exact = FALSE)

# Display results:

spearman_result

OUTPUT

Spearman's rank correlation rho

data: x and y

S = 486, p-value = 3.73e-11

alternative hypothesis: true rho is not equal to 0

sample estimates:

rho

0.8918799 Interpretation: Spearman’s rho = 0.892 with p-value = 3.73^{-11}. We reject the null hypothesis. This indicates that there is evidence of a significant monotonic association between the two variables.

EJ KORREKTURLÆST

-