Content from Reproducible Data Analysis

Last updated on 2026-02-03 | Edit this page

Estimated time: 12 minutes

Overview

Questions

- How do I ensure that my results can be reproduced?

Objectives

- Explain how to use markdown

- Demonstrate how to include pieces of code

Introduction

A key concept in the scientific process is reproducibility. We should be able to run the same experiment again, and get, more or less, the same result.

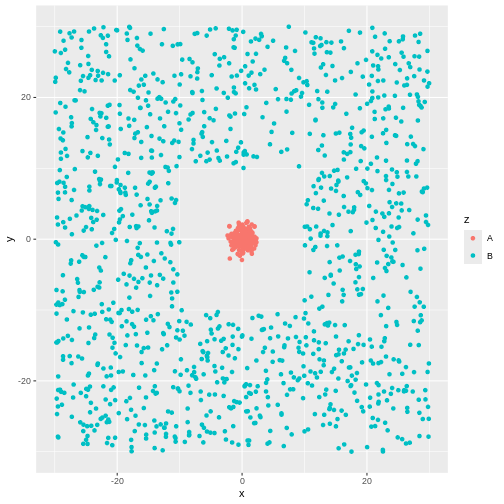

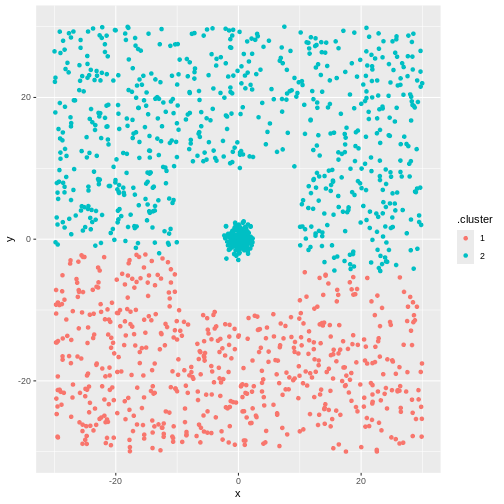

We will not always get the same result, applying the same functions on the same data - some statistical techniques relies on randomness.

An example is k-means, that clusters data based on randomly selected initial centroids.

This also applies to the analysis of data. If we have a collection of measurements of blood pressure from patients before and after they have taken an antihypertensive drug, we might arrive at the result that this specific drug is not working. Doing the same analysis tomorrow, we should reach the same result.

And that can be surprisingly difficult!

There are a lot of pitfalls, ranging from accessibility to incentive structures in academia. But the three areas where R can help us are:

- Software Environment

- Documentation and Metadata

- Complex Workflows

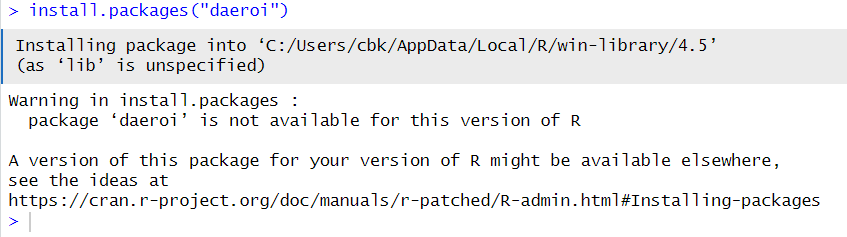

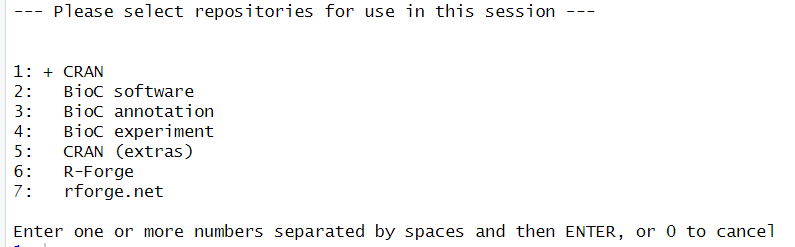

Software Environment

Data analysis is done using specific software, libraries or packages, in a variety of versions. And it happens in an environment on the computer that might not be identical from day to day.

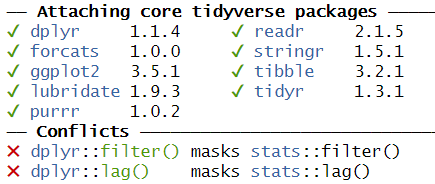

One example of these problems is shown every time we load tidyverse:

This message informs us that there is a filter()

function in the stats packages which is part of the core

R-installation. That function is masked by the filter()

function from the tidyverse´ packagedplyr`.

If our analysis relies on the way the filter() function

works in the tidyverse, we will get errors if

tidyverse is not loaded.

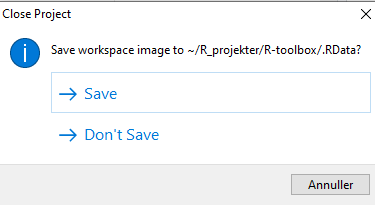

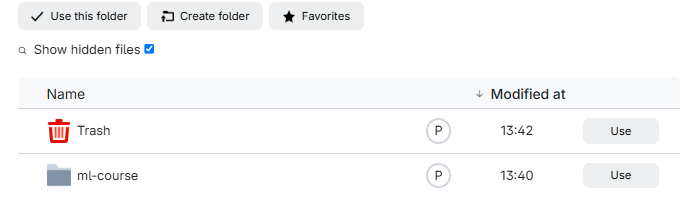

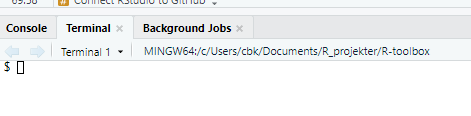

We might also have data stored in memory. Every time we close RStudio, we are asked if we want to save the environment:

This will save all the objects we have in our environment, in order for RStudio to be able to load them into memory when we open RStudio again.

That can be nice and useful. On the other hand we run the risk of

having the wrong version of the

my_data_that_is_ready_for_analysis dataframe lying around

in memory.

In addition we can experience performance problems. Storing a lot of large objects before closing RStudio can take a lot of time. And loading them into memory when opening RStudio will also take a lot of time.

On modern computers we normally have plenty of storage - but it is entirely possible to fill your harddrive with R-environments to the point where your computer crashes.

Documentation and Metadata

What did we actually do in the analysis? Why did we do it? Why are we reaching the conclusion we’ve arrived at?

Three very good questions. Having good metadata, data that describes your data, often makes understanding your data easier. Documenting the individual steps of your analysis, may not seem necessary right now - you know why you are doing what you are doing. But future you - you in three months, or some one else, might not remember or be able to guess (correctly).

Complex Workflows

Doing data analysis in eg Excel, can involve a lot of pointing and clicking.

And in any piece of software, the analysis will normally always involve more than one step. Those steps will have to be done in the correct order. Calculating a mean of some values, depends heavily on whether it happens before or after deleting irrelevant observations.

The solution to all of this!

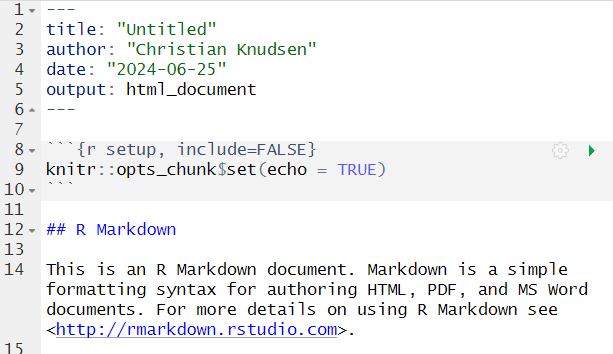

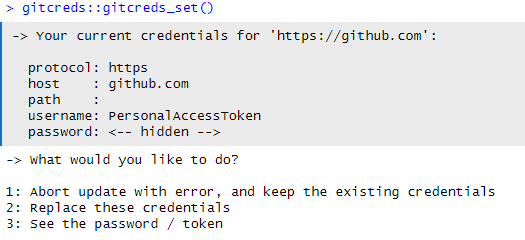

Working in RMarkdown allows us to collect the text describing our data, what and why we are doing what we do, the code actually doing it, and the results of that code - all in one document.

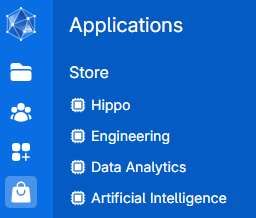

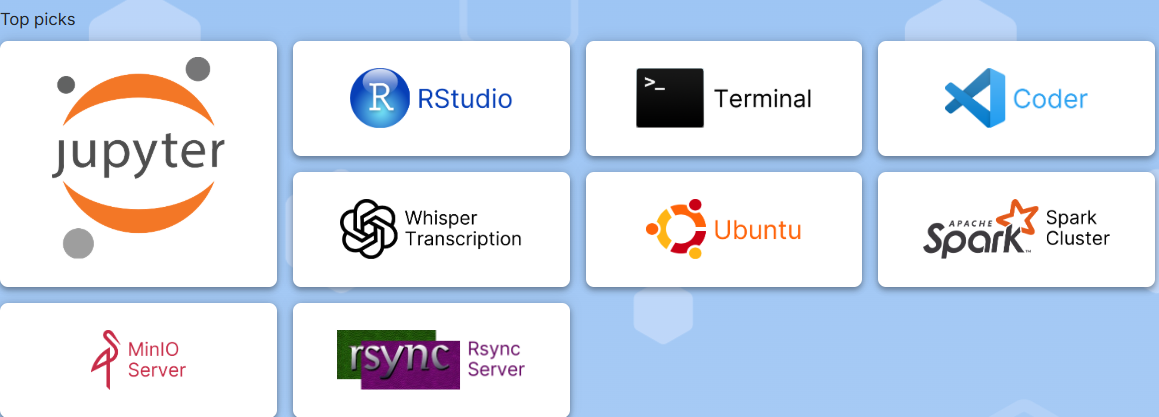

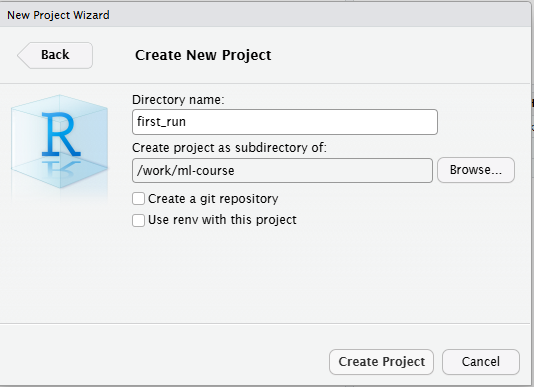

Open a new file, choose RMarkdown, and give your document a name:

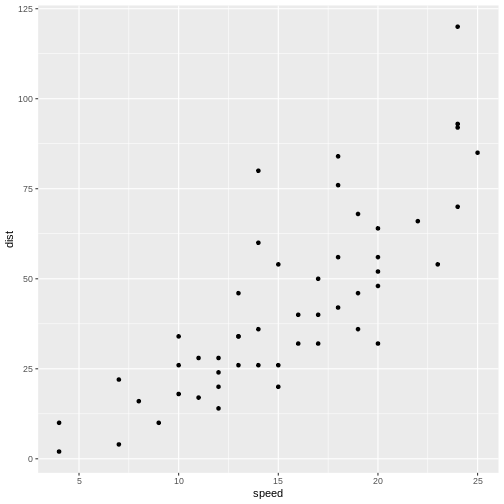

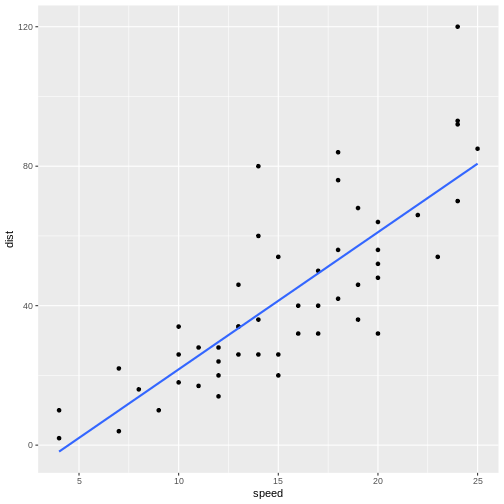

The code chunks, marked here with a light grey background, contains code, in this case not very advanced code. You can run the entire code chunk by clicking the green arrow on the right. Or by placing your cursor in the line of code you want to run, and pressing ctrl+enter (or command+enter on a Mac).

Outside the code chunks we can add our reasons for actually running

summary on the cars dataframe, and describe

what it contains.

You will see a new button in RStudio:

Clicking this, will “knit” your document; run each chunck of code, add the output to your document, and combine your code, the results and all your explanatory text to one html-document.

If you do not want an HTMl-document, you can knit to a MicroSoft Word document. Depending on your computer, you can knit directly to a pdf.

Having the entirety of your analysis in an RMarkdown document, and then running it, ensures that the individual steps in the analysis are run in the correct order.

It does not ensure that your documentation of what you do is written - it makes it easy to add it, but you still have to do it.

But what about the environment?

So we force ourself to have the steps in our analysis in the correct order, and we make it easy to add documentation. What about the environment?

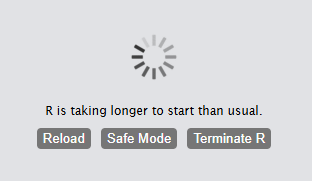

Working with RMarkdown also adresses this problem. Every time we

knit our document, RStudio opens a new session of R,

without libraries or objects in memory. This ensures that the analysis

is done in the exact same way each and every time.

This, on the other hand, requires us to add code chunks loading libraries and data to our document.

Try it yourself

Make a new RMarkdown document, add library(tidyverse) to

the first chunk, add your own text, and change the plot to plot the

distance variable from the cars data set.

Make a new RMarkdown document - File -> New File -> R Markdown.

Change the final code chunk to include plot(cars$dist)

instead of plot(pressure), and add library(tidyverse).

- Use RMarkdown to enforce reproducible analysis

Content from Reading data from file

Last updated on 2026-02-03 | Edit this page

Estimated time: 12 minutes

Overview

Questions

- How do you read in data from files?

Objectives

- Explain how to read in data from a selection of different data files.

Introduction

The first step of doing dataanalysis, is normally to read in the data.

Data can come from many different sources, and it is practically impossible to cover every possible format. Here we cover some of the more common.

The most important point is that there is a very high probability that at least one package exists that is designed to read a specific weird data format.

Use code!

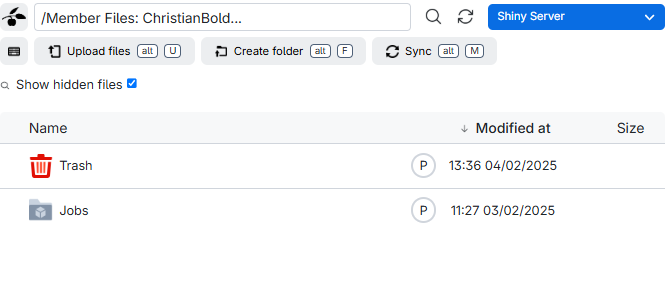

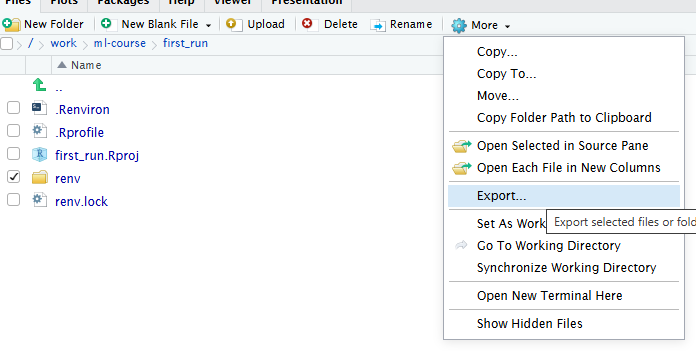

RStudio makes it simple to load most common data formats: Click on the file in the “Files” tab in RStudio, and choose “Import Dataset”:

RStudio will then provide an interface for loading the data:

However in general we prefer to have a script or a document, that can be run without us pointing and clicking. So - instead of importing the data in this way, copy the code that RStudio uses to import the data, and paste it into your script or document.

This option does not yet exist in Positron.

CSV-files

The most basic file type for storing and transferring data. A “simple” textfile, containing tabular data. One line of text for each row of data, each cell in that row, corresponding to a column, separated with a separator, typically a comma.

Many languages use commas as decimal separators. That neccesitates an option for using something else than a comma. Typically a semicolon.

Truly commaseparated files

Use read.csv() (from base-R) or read_csv()

(from readr, included in tidyverse)

We recommend using read_csv().

Semicolon separated files

Use read.csv2() (from base-R) or

read_csv2() (from readr, included in

tidyverse)

We recommend read_csv2()

What they have in common

read_csv and read_csv2 take a lot of

arguments that can control datatypes, handling of headers etc. For most

use, the default options are enough, but if you need to adjust

something, there are plenty of options for that.

guess_max

read_csv and read_csv2 tries to guess the

datatypes in the file, and will convert the data accordingly. That will

return a dataframe where date-time data is stored as such. The functions

by default reads the first 1000 rows, and makes a guess on the datatype

based on that.

That can lead to problems if the first 1000 rows of a column contain

numbers, and row 1001 contains text. In that case the entire row will be

coerced to numeric, and the following rows will contain

NA values. Adjust the argument guess_max to

something larger to catch this problem.

To include every row in the guess, add guess_max = Inf -

but be careful if you have a very large dataset.

Excel-files

Use the readxl package. Excel comes in two variants,

xls and xlsx. read_excel() makes

a qualified quess of the actual type your excel-file is. Should we need

to specify, we can use read_xls() or

read_xlsx().

Workbooks often contains more than one sheet. We can specify which we want to read in:

read_excel(path = "filename", sheet = 2)

Which will read in sheet number 2 from the workbook “filename”.

Read the documentation for details on how to read in specific cells

or ranges. You can find it running ?read_excel, or

help(read_excel).

SPSS

SPSS, originally “Statistical Package for the Social Sciences”, later renamed “Statistical Product and Service Solutions” is a proprietary statistical software suite developed by IBM.

Not surprisingly it is widely used in social science.

The package haven supports reading SPSS (Stata and SAS)

files

Use the package to read in spss files:

R

library(haven)

read_spss("filename")

The function returns a tibble.

Note that SPSS uses a variety of different formats.

read_spss() will make a guess of the correct format, but if

problems arise, try using one of the other functions provided in

haven

Stata

Stata is a proprietary statistical software package, used in a multitude of different fields, primarily biomedicine, epidemiololy, sociology and economics.

As mentioned above, the haven package provides functions

for reading Stata files:

R

library(haven)

read_stata("filename")

The function returns at tibble.

As with SPSS Stata uses a couple of different fileformats, and

read_stata makes a guess as to which format is used. If

problems arise, haven has more specific functions for

reading specific file formats.

SAS

SAS is a proprietary statistical software suite developed by SAS Institute.

The package haven can read SAS-files:

R

library(haven)

read_sas("filename")

The function returns at tibble.

As with SPSS and Stata, SAS uses a couple of different fileformats,

and read_sas tries to guess the correct format.

If problems arise, haven has more specific functions for

reading specific file formats.

JSON

Not all data come in a nice rectangular format, note the multiple phone numbers for the White House:

CountryUSA |

NameNASA |

Phonenumber

|

|

| White House |

(202)-456-1111 |

||

| Russia | Kremlin | 0107-095-295-9051 | |

| Vatican | The Pope | 011-39-6-6982 | |

There are two locations in the US, and one of them have two phone numbers. These kinds of structures, where one row contains data with more than one row (etc), are called nested, and are often stored or distributed in the JSON-format.

JSON can be read using fromJSON() from the

jsonlite library.

R

library(jsonlite)

fromJSON("filename")

Note that you will end up with nested columns - containing lists - which you probably will have to handle afterwards.

Other formats

In general if a piece of software is in widespread enough use that you encounter the weird file-format it uses, someone will have written a package for reading it. Google is your friend here!

Also, if you encounter a really weird dataformat, please send us an example so we can expand our knowledge.

- The

readrversion ofread_csv()is preferred - Remember that csv is not always actually separated with commas.

- The

havenpackage contains functions for reading common proprietary file formats. - In general a package will exist for reading strange datatypes. Google is your friend!

- Use code to read in your data

Content from Descriptive Statistics

Last updated on 2026-02-03 | Edit this page

Estimated time: 12 minutes

Overview

Questions

- How can we describe a set of data?

Objectives

- Learn about the most common ways of describing a variable

Introduction

Det kan være en udfordring hvis deltagene ikke ved hvad et gennemsnit er.

En af de overordnede pointer vi gerne vil frem til her, er percentilerne og det kumulative densitetsplot - der danner grundlag for forståelsen af normalfordelingens sammenhæng med statistiske tests.

Descriptive statistics involves summarising or describing a set of data. It usually presents quantitative descriptions in a short form, and helps to simplify large datasets.

Most descriptive statistical parameters applies to just one variable in our data, and includes:

| Central tendency | Measure of variation | Measure of shape |

|---|---|---|

| Mean | Range | Skewness |

| Median | Quartiles | Kurtosis |

| Mode | Inter Quartile Range | |

| Variance | ||

| Standard deviation | ||

| Percentiles |

Central tendency

The easiest way to get summary statistics on data is to use the

summarise function from the tidyverse

package.

R

library(tidyverse)

In the following we are working with the palmerpenguins

dataset. Note that the actual data is called penguins and

is part of the package palmerpenguins:

R

library(palmerpenguins)

head(penguins)

OUTPUT

# A tibble: 6 × 8

species island bill_length_mm bill_depth_mm flipper_length_mm body_mass_g

<fct> <fct> <dbl> <dbl> <int> <int>

1 Adelie Torgersen 39.1 18.7 181 3750

2 Adelie Torgersen 39.5 17.4 186 3800

3 Adelie Torgersen 40.3 18 195 3250

4 Adelie Torgersen NA NA NA NA

5 Adelie Torgersen 36.7 19.3 193 3450

6 Adelie Torgersen 39.3 20.6 190 3650

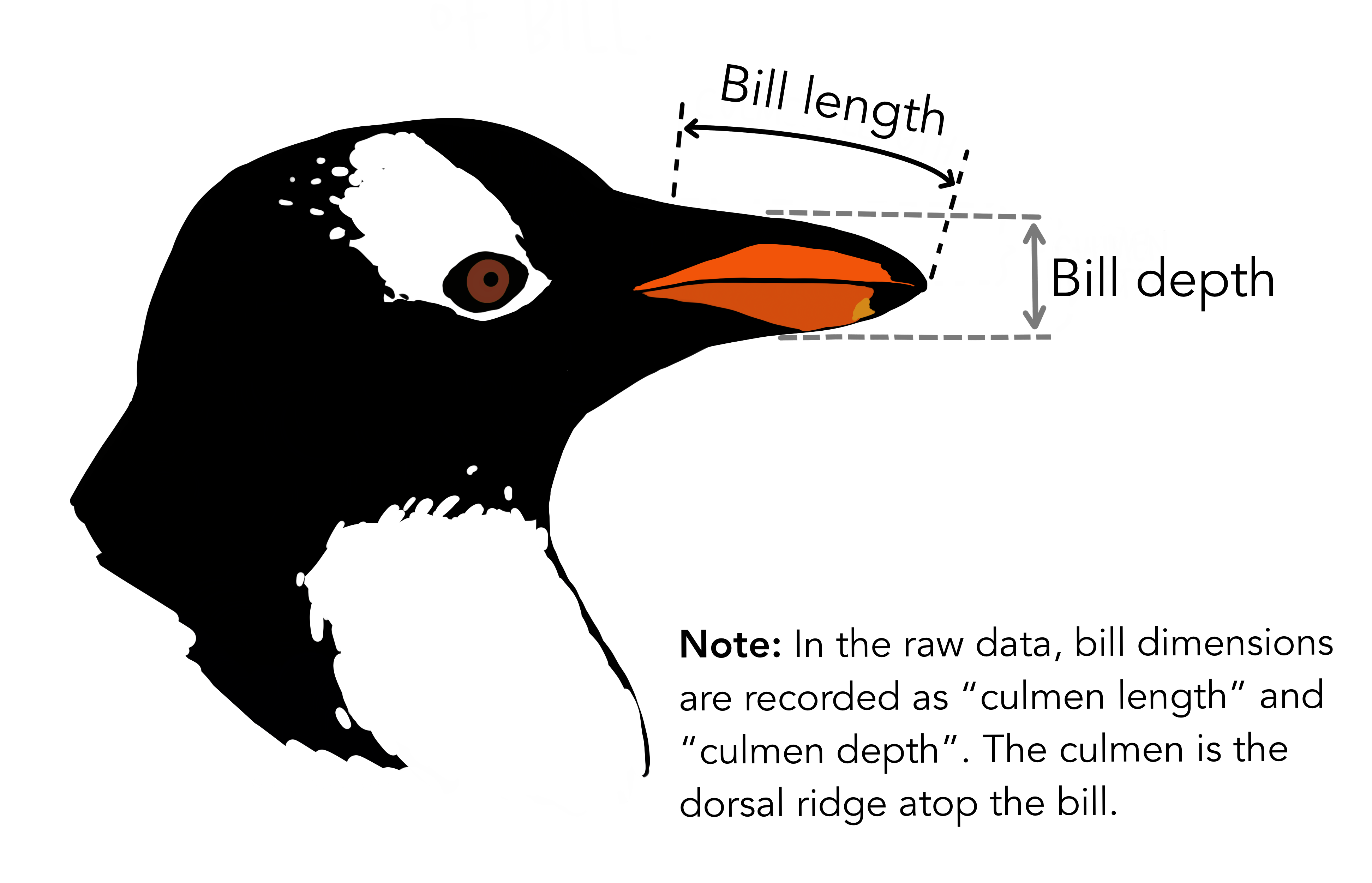

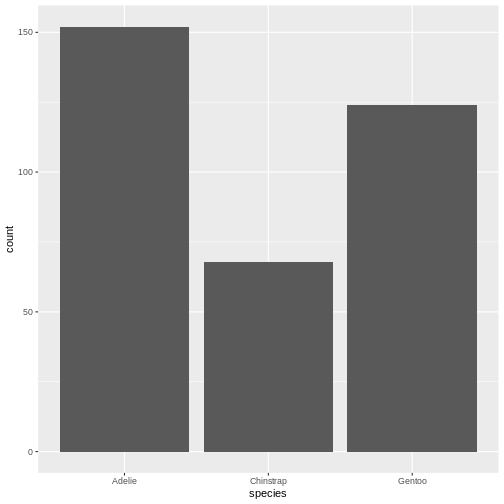

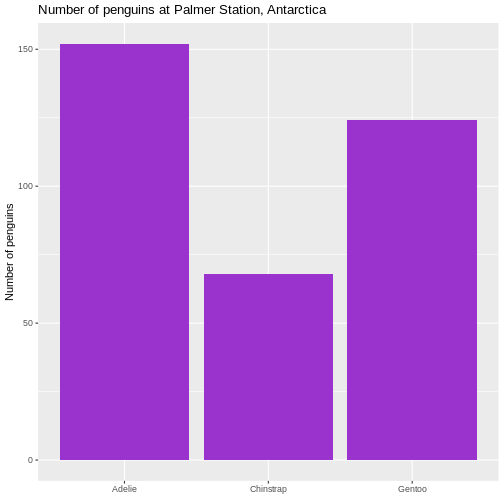

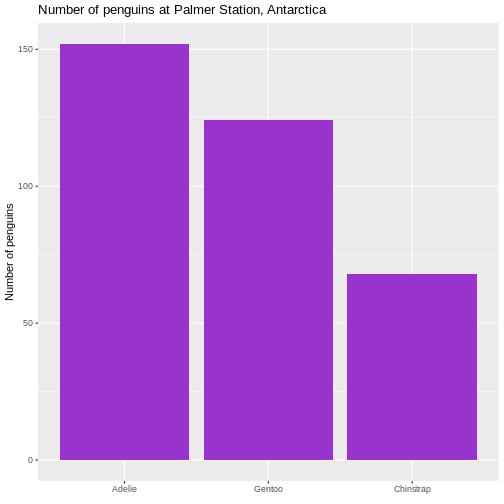

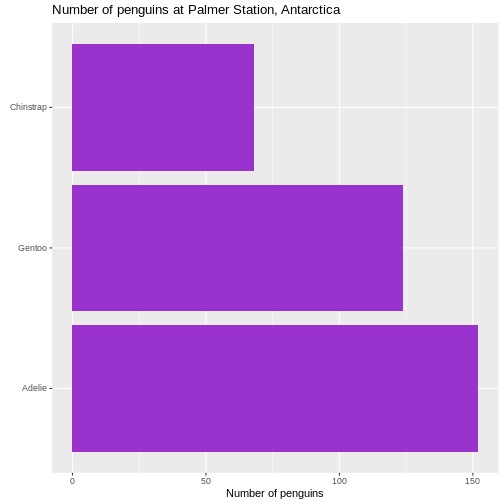

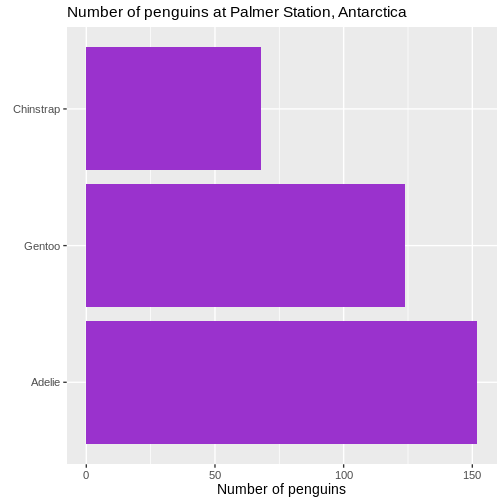

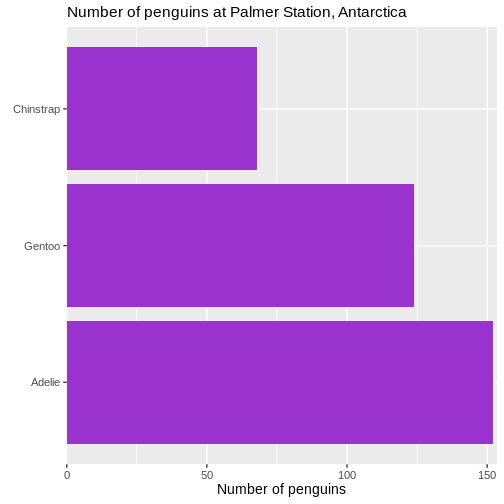

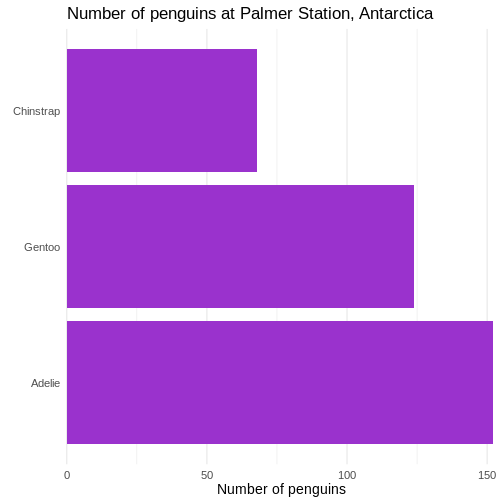

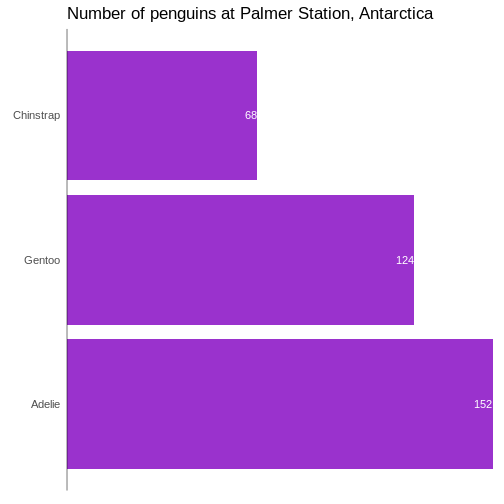

# ℹ 2 more variables: sex <fct>, year <int>344 penguins have been recorded at three different islands over three years. Three different penguin species are in the dataset, and we have data on their weight, sex, length of their flippers and two measurements of their bill (beak).

{Copyright Allison

Horst}

{Copyright Allison

Horst}

Specifically we are going to work with the weight of the penguins,

stored in the variable body_mass_g:

R

penguins$body_mass_g

OUTPUT

[1] 3750 3800 3250 NA 3450 3650 3625 4675 3475 4250 3300 3700 3200 3800 4400

[16] 3700 3450 4500 3325 4200 3400 3600 3800 3950 3800 3800 3550 3200 3150 3950

[31] 3250 3900 3300 3900 3325 4150 3950 3550 3300 4650 3150 3900 3100 4400 3000

[46] 4600 3425 2975 3450 4150 3500 4300 3450 4050 2900 3700 3550 3800 2850 3750

[61] 3150 4400 3600 4050 2850 3950 3350 4100 3050 4450 3600 3900 3550 4150 3700

[76] 4250 3700 3900 3550 4000 3200 4700 3800 4200 3350 3550 3800 3500 3950 3600

[91] 3550 4300 3400 4450 3300 4300 3700 4350 2900 4100 3725 4725 3075 4250 2925

[106] 3550 3750 3900 3175 4775 3825 4600 3200 4275 3900 4075 2900 3775 3350 3325

[121] 3150 3500 3450 3875 3050 4000 3275 4300 3050 4000 3325 3500 3500 4475 3425

[136] 3900 3175 3975 3400 4250 3400 3475 3050 3725 3000 3650 4250 3475 3450 3750

[151] 3700 4000 4500 5700 4450 5700 5400 4550 4800 5200 4400 5150 4650 5550 4650

[166] 5850 4200 5850 4150 6300 4800 5350 5700 5000 4400 5050 5000 5100 4100 5650

[181] 4600 5550 5250 4700 5050 6050 5150 5400 4950 5250 4350 5350 3950 5700 4300

[196] 4750 5550 4900 4200 5400 5100 5300 4850 5300 4400 5000 4900 5050 4300 5000

[211] 4450 5550 4200 5300 4400 5650 4700 5700 4650 5800 4700 5550 4750 5000 5100

[226] 5200 4700 5800 4600 6000 4750 5950 4625 5450 4725 5350 4750 5600 4600 5300

[241] 4875 5550 4950 5400 4750 5650 4850 5200 4925 4875 4625 5250 4850 5600 4975

[256] 5500 4725 5500 4700 5500 4575 5500 5000 5950 4650 5500 4375 5850 4875 6000

[271] 4925 NA 4850 5750 5200 5400 3500 3900 3650 3525 3725 3950 3250 3750 4150

[286] 3700 3800 3775 3700 4050 3575 4050 3300 3700 3450 4400 3600 3400 2900 3800

[301] 3300 4150 3400 3800 3700 4550 3200 4300 3350 4100 3600 3900 3850 4800 2700

[316] 4500 3950 3650 3550 3500 3675 4450 3400 4300 3250 3675 3325 3950 3600 4050

[331] 3350 3450 3250 4050 3800 3525 3950 3650 3650 4000 3400 3775 4100 3775How can we describe these values?

Mean

The mean is the average of all datapoints. We add all values

(excluding the missing values encoded with NA), and divide

with the number of observations:

\[\overline{x} = \frac{1}{N}\sum_1^N x_i\]

Where N is the number of observations, and \(x_i\) is the individual observations in the sample \(x\).

The easiest way of getting the mean is using the mean()

function. Adding the na.rm = TRUE argument removes missing

values before the calculation is done:

R

mean(penguins$body_mass_g, na.rm = TRUE)

OUTPUT

[1] 4201.754A slightly more cumbersome way is using the summarise()

function from tidyverse:

R

penguins |>

summarise(avg_mass = mean(body_mass_g, na.rm = T))

OUTPUT

# A tibble: 1 × 1

avg_mass

<dbl>

1 4202.As we will see below, this function streamlines the process of getting multiple descriptive values.

Barring significant outliers, mean is an expression of

position of the data. This is the weight we would expect a random

penguin in our dataset to have.

However, we have three different species of penguins in the dataset, and they have quite different average weights. There is also a significant difference in the average weight for the two sexes.

We will get to that at the end of this segment.

Median

Similarly to the average/mean, the median is an

expression of the location of the data. If we order our data by size,

from the smallest to the largest value, and locate the middle

observation, we get the median. This is the value that half of the

observations is smaller than. And half the observations is larger.

R

median(penguins$body_mass_g, na.rm = TRUE)

OUTPUT

[1] 4050We can note that the mean is larger than the median. This indicates that the data is skewed, in this case toward the larger penguins.

We can get both median and mean in one go

using the summarise() function:

R

penguins |>

summarise(median = median(body_mass_g, na.rm = TRUE),

mean = mean(body_mass_g, na.rm = TRUE))

OUTPUT

# A tibble: 1 × 2

median mean

<dbl> <dbl>

1 4050 4202.This illustrates for the learners that we can calculate more than one summary statistics in one summarise function.

Mode

Mode is the most common, or frequently occurring, observation. R does not have a build-in function for this, but we can easily find the mode by counting the different observations,and locating the most common one.

We typically do not use this for continous variables. The mode of the

sex variable in this dataset can be found like this:

R

penguins |>

count(sex) |>

arrange(desc(n))

OUTPUT

# A tibble: 3 × 2

sex n

<fct> <int>

1 male 168

2 female 165

3 <NA> 11We count the different values in the sex variable, and

arrange the counts in descending order (desc). The mode of

the sex variable is male.

In this specific case, we note that the dataset is pretty evenly balanced regarding the two sexes.

Measures of variance

Knowing where the observations are located is interesting. But how do they vary? How can we describe the variation in the data?

Range

The simplest information about the variation is the range. What is the smallest and what is the largest value? Or, what is the spread?

We can get that by using the min() and

max() functions in a summarise() function:

R

penguins |>

summarise(min = min(body_mass_g, na.rm = T),

max = max(body_mass_g, na.rm = T))

OUTPUT

# A tibble: 1 × 2

min max

<int> <int>

1 2700 6300There is a dedicated function, range(), that does the

same. However it returns two values (for each row), and the summarise

function expects to get one value.

If we would like to use the range() function, we can add

it using the reframe() function instead of

summarise():

R

penguins |>

reframe(range = range(body_mass_g, na.rm = T))

OUTPUT

# A tibble: 2 × 1

range

<int>

1 2700

2 6300Hvorfor ikke bare bruge reframe generelt? Kognitivt load. Summarise angiver hvad det er vi gør. Vi summariser noget data. Reframe angiver hvordan vi omdanner et output til en dataframe. Resultatet bliver det samme, men vi taler ikke længere om hvad det egentlig er vi er ude efter i operationen.

Variance

The observations varies. They are not all located at the mean (or median), but are spread out on both sides of the mean. Can we get a numerical value describing that?

An obvious way would be to calculate the difference between each of the observations and the mean, and then take the average of those differences.

That will give us the average deviation. But we have a problem. The average weight of penguins was 4202 (rounded). Look at two penguins, one weighing 5000, and another weighing 3425. The differences are:

- 5000 - 4202 = 798

- 3425 - 4202 = -777

The sum of those two differences is: -777 + 798 = 21 g. And the average is then 10.5 gram. That is not a good estimate of a variation from the mean of more than 700 gram.

The problem is, that the differences can be both positive and negative, and might cancel each other out.

We solve that problem by squaring the differences, and calculate the mean of those.

Why not just averaging the absolute values? Using the square rather than the absolute difference, weighs the deviations so larger deviations have relatively larger influence on the variance. Squaring results in a continous and differentiable function, which helps in situations where we have to do an optimisation. Also the normal distribution is defined by the variance as defined here, and we would really like to get a connection between what we observe here, and the normal distribution.

For the population variance, the mathematical notation would be:

\[ \sigma^2 = \frac{\sum_{i=1}^N(x_i - \mu)^2}{N} \]

Population or sample?

Why are we suddenly using \(\mu\) instead of \(\overline{x}\)? Because this definition uses the population mean. The mean, or average, in the entire population of all penguins everywhere in the universe. But we have not weighed all those penguins.

And the sample variance:

\[ s^2 = \frac{\sum_{i=1}^N(x_i - \overline{x})^2}{N-1} \]

Note that we also change the \(\sigma\) to an \(s\).

And again we are not going to do that by hand, but will ask R to do it for us:

R

penguins |>

summarise(

variance = var(body_mass_g, na.rm = T)

)

OUTPUT

# A tibble: 1 × 1

variance

<dbl>

1 643131.Et godt spørgsmål vil være - hvorfor dividerer vi med N-1 i stedet for N?

Det kaldes for en “Bessel korrektion”. Den ene årsag til at vi gør det er, at godt nok er gennemsnittet i stikprøven et godt estimat for gennemsnittet i populationen. Men det er ikke præcis det samme. Når vi dividerer med et mindre tal, får vi en større værdi for variancen - og dermed et mere konservativt, eller forsigtigt, estimat på variansen.

Den anden årsag handler om frihedsgrader. Hvis vi har tre værdier og et gennemsnit, kan vi vælge hvad de to af værdierne er, og hvad gennemsnittet er. Helt frit. Men den tredie værdi er givet. Den kan vi ikke vælge frit.

Standard deviation

There is a problem with the variance. It is 643131, completely off scale from the actual values. There is also a problem with the unit which is in \(g^2\).

A measurement of the variation of the data would be the standard deviation, simply defined as the square root of the variance:

R

penguins |>

summarise(

s = sd(body_mass_g, na.rm = T)

)

OUTPUT

# A tibble: 1 × 1

s

<dbl>

1 802.Since the standard deviation occurs in several statistical tests, it is more frequently used than the variance. It is also more intuitively relateable to the mean.

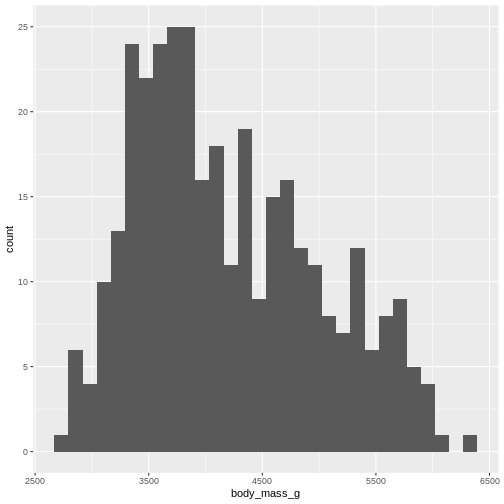

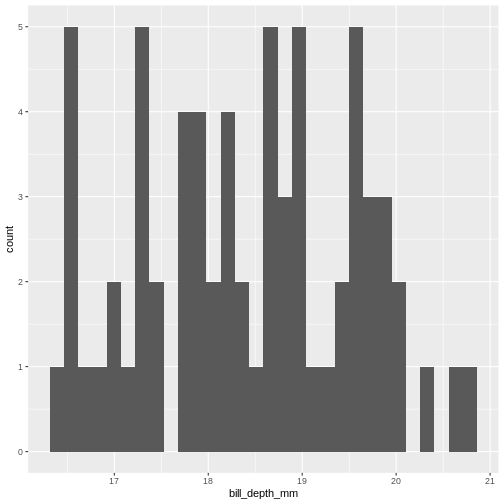

A histogram

A visual illustration of the data can be nice. Often one of the first we make, is a histogram.

A histogram is a plot or graph where we split the range of observations in a number of “buckets”, and count the number of observations in each bucket:

R

penguins |>

select(body_mass_g) |>

filter(!is.na(body_mass_g)) |>

mutate(buckets = cut(body_mass_g, breaks=seq(2500,6500,500))) |>

group_by(buckets) |>

summarise(antal = n())

OUTPUT

# A tibble: 8 × 2

buckets antal

<fct> <int>

1 (2.5e+03,3e+03] 11

2 (3e+03,3.5e+03] 67

3 (3.5e+03,4e+03] 92

4 (4e+03,4.5e+03] 57

5 (4.5e+03,5e+03] 54

6 (5e+03,5.5e+03] 33

7 (5.5e+03,6e+03] 26

8 (6e+03,6.5e+03] 2Typically, rather than counting ourself, we leave the work to R, and make a histogram directly:

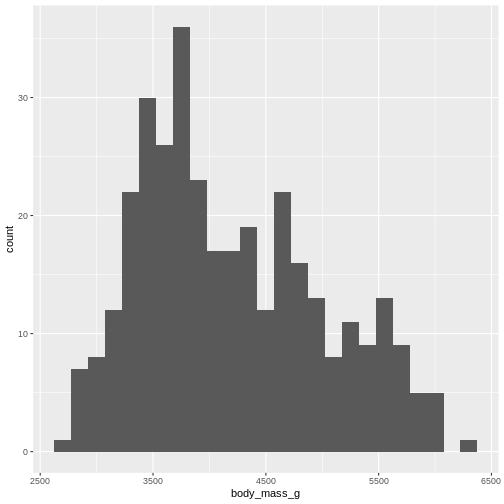

R

penguins |>

ggplot((aes(x=body_mass_g))) +

geom_histogram()

OUTPUT

`stat_bin()` using `bins = 30`. Pick better value `binwidth`.WARNING

Warning: Removed 2 rows containing non-finite outside the scale range

(`stat_bin()`).

By default ggplot chooses 30 bins, typically we should chose a different number:

R

penguins |>

ggplot((aes(x=body_mass_g))) +

geom_histogram(bins = 25)

WARNING

Warning: Removed 2 rows containing non-finite outside the scale range

(`stat_bin()`).

Or, ideally, set the widths of them, manually:

R

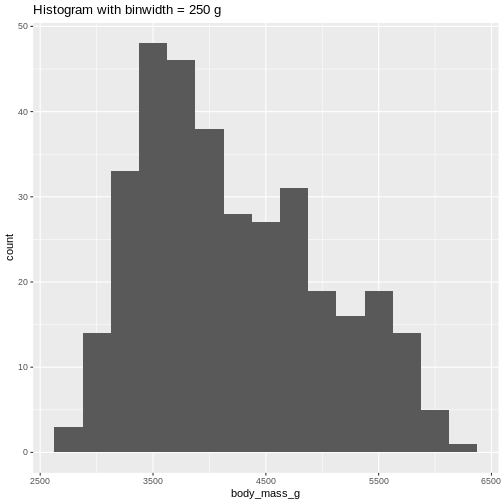

penguins |>

ggplot((aes(x=body_mass_g))) +

geom_histogram(binwidth = 250) +

ggtitle("Histogram with binwidth = 250 g")

WARNING

Warning: Removed 2 rows containing non-finite outside the scale range

(`stat_bin()`). Or even specify the exact intervals we want, here intervals from 0 to

6500 gram in intervals of 250 gram:

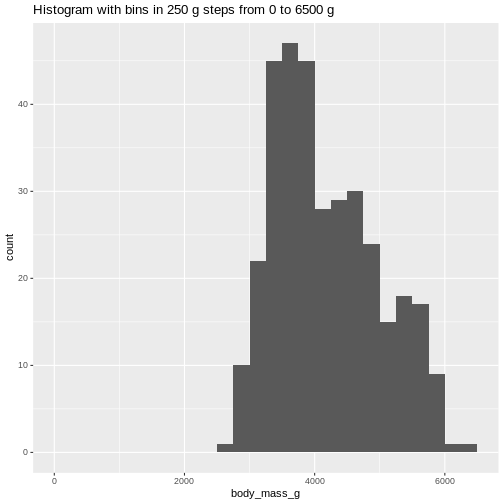

Or even specify the exact intervals we want, here intervals from 0 to

6500 gram in intervals of 250 gram:

R

penguins |>

ggplot((aes(x=body_mass_g))) +

geom_histogram(breaks = seq(0,6500,250)) +

ggtitle("Histogram with bins in 250 g steps from 0 to 6500 g")

WARNING

Warning: Removed 2 rows containing non-finite outside the scale range

(`stat_bin()`). The histogram provides us with a visual indication of both range, the

variation of the values, and an idea about where the data is

located.

The histogram provides us with a visual indication of both range, the

variation of the values, and an idea about where the data is

located.

Quartiles

The median can be understood as splitting the data in two equally sized parts, where one is characterized by having values smaller than the median and the other as having values larger than the median. It is the value where 50% of the observations are smaller.

Similary we can calculate the value where 25% of the observations are smaller.

That is often called the first quartile, where the median is the 50%, or second quartile. Quartile implies four parts, and the existence of a third or 75% quartile.

We can calcultate those using the quantile function:

R

quantile(penguins$body_mass_g, probs = .25, na.rm = T)

OUTPUT

25%

3550 and

R

quantile(penguins$body_mass_g, probs = .75, na.rm = T)

OUTPUT

75%

4750 probs because if we select a random penguin, we have a 25% chance of selecting a penguin that weighs less than 3550 gram. This ties in to percentiles and qq-plots.

We are often interested in knowing the range in which 50% of the observations fall.

That is used often enough that we have a dedicated function for it:

R

penguins |>

summarise(iqr = IQR(body_mass_g, na.rm = T))

OUTPUT

# A tibble: 1 × 1

iqr

<dbl>

1 1200The name of the quantile function implies that we might have other quantiles than quartiles. Actually we can calculate any quantile, eg the 2.5% quantile:

R

quantile(penguins$body_mass_g, probs = .025, na.rm = T)

OUTPUT

2.5%

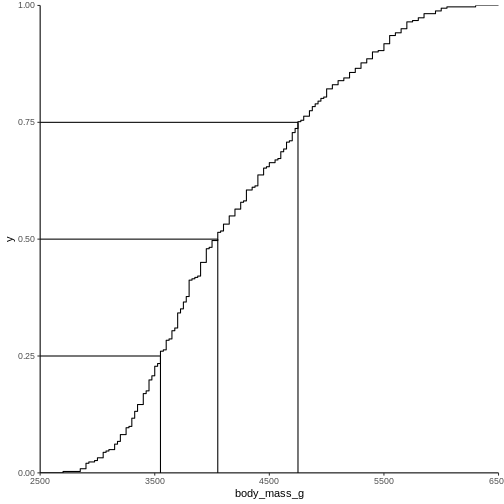

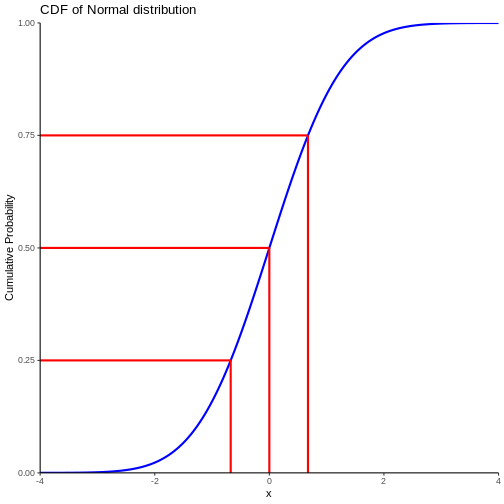

2988.125 The individual quantiles can be interesting in themselves. If we want a visual representation of all quantiles, we can calculate all of them, and plot them.

Instead of doing that by hand, we can use a concept called CDF or cumulative density function:

R

CDF <- ecdf(penguins$body_mass_g)

CDF

OUTPUT

Empirical CDF

Call: ecdf(penguins$body_mass_g)

x[1:94] = 2700, 2850, 2900, ..., 6050, 6300That was not very informative. Lets plot it:

den skal vi nok have beskrevet lidt mere.

Men pointen er, at vi for enhver værdi kan aflæse ting. Hvor stor en andel af pingvinerne vejer mindre end 3000 g? Vi kan finde 3000 på x-aksen, og aflæse den matchende værdi på y-aksen.

Det svarer også til - hvis vi tager en tilfældig pingvin, hvad er så sandsynligheden for at den vejer mindre end 3000 gram? Eller for at den vejer mere end 5000 gram?

Measures of shape

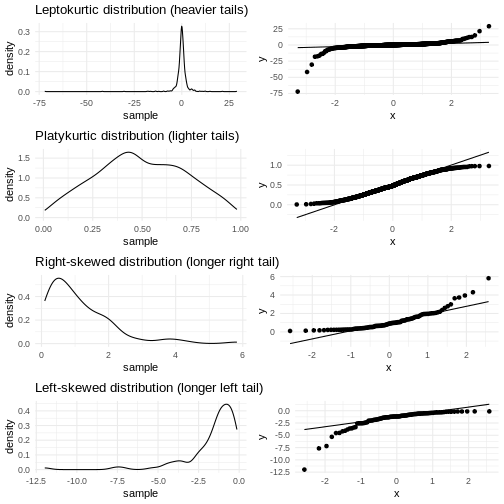

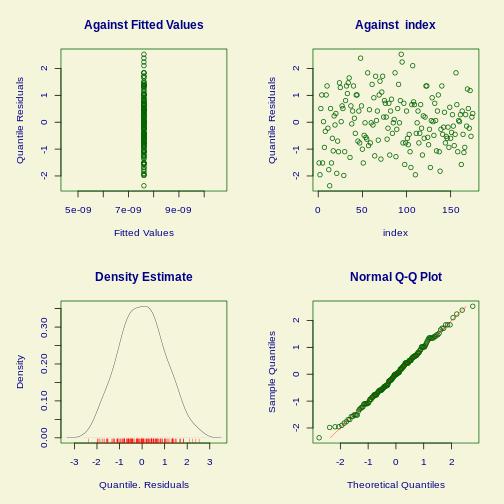

Skewness

We previously saw a histogram of the data, and noted that the observations were skewed to the left, and that the “tail” on the right was longer than on the left. That skewness can be quantised.

There is no function for skewness build into R, but we can get it

from the library e1071

R

library(e1071)

OUTPUT

Attaching package: 'e1071'OUTPUT

The following object is masked from 'package:ggplot2':

elementR

skewness(penguins$body_mass_g, na.rm = T)

OUTPUT

[1] 0.4662117The skewness is positive, indicating that the data are skewed to the left, just as we saw. A negative skewness would indicate that the data skew to the right.

Nej, det er ikke på nogen måde intuitivt. Vi må nok finde ud af hvorfor konventionen er som den er.

Kurtosis

Another parameter describing the shape of the data is kurtosis. We can think of that as either “are there too many observations in the tails?” leading to a relatively low peak. Or, as “how pointy is the peak” - because the majority of observations are centered in the peak, rather than appearing in the tails.

We use the e1071 package again:

R

kurtosis(penguins$body_mass_g, na.rm = T)

OUTPUT

[1] -0.73952Kurtosis is defined weirdly, and here we get “excess” kurtosis, the actual kurtosis minus 3. We have negative kurtosis, indicating that the peak is flat, and the tails are fat.

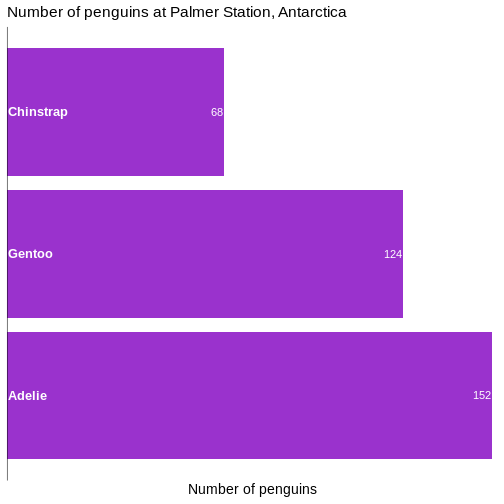

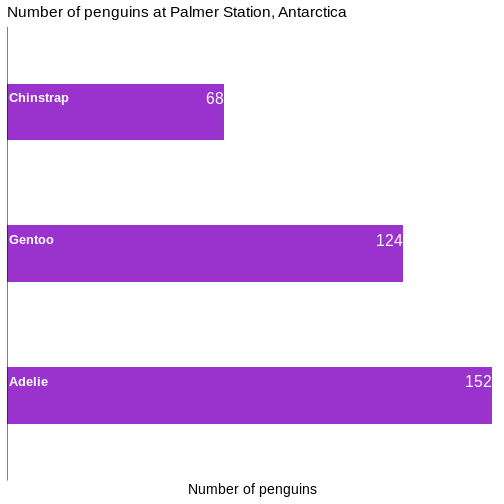

Everything Everywhere All at Once

A lot of these descriptive values can be gotten for every variable in

the dataset using the summary function:

R

summary(penguins)

OUTPUT

species island bill_length_mm bill_depth_mm

Adelie :152 Biscoe :168 Min. :32.10 Min. :13.10

Chinstrap: 68 Dream :124 1st Qu.:39.23 1st Qu.:15.60

Gentoo :124 Torgersen: 52 Median :44.45 Median :17.30

Mean :43.92 Mean :17.15

3rd Qu.:48.50 3rd Qu.:18.70

Max. :59.60 Max. :21.50

NA's :2 NA's :2

flipper_length_mm body_mass_g sex year

Min. :172.0 Min. :2700 female:165 Min. :2007

1st Qu.:190.0 1st Qu.:3550 male :168 1st Qu.:2007

Median :197.0 Median :4050 NA's : 11 Median :2008

Mean :200.9 Mean :4202 Mean :2008

3rd Qu.:213.0 3rd Qu.:4750 3rd Qu.:2009

Max. :231.0 Max. :6300 Max. :2009

NA's :2 NA's :2 Here we get the range, the 1st and 3rd quantiles (and from those the IQR), the median and the mean and, rather useful, the number of missing values in each variable.

We can also get all the descriptive values in one table, by adding more than one summarizing function to the summarise function:

R

penguins |>

summarise(min = min(body_mass_g, na.rm = T),

max = max(body_mass_g, na.rm = T),

mean = mean(body_mass_g, na.rm = T),

median = median(body_mass_g, na.rm = T),

stddev = sd(body_mass_g, na.rm = T),

var = var(body_mass_g, na.rm = T),

Q1 = quantile(body_mass_g, probs = .25, na.rm = T),

Q3 = quantile(body_mass_g, probs = .75, na.rm = T),

iqr = IQR(body_mass_g, na.rm = T),

skew = skewness(body_mass_g, na.rm = T),

kurtosis = kurtosis(body_mass_g, na.rm = T)

)

OUTPUT

# A tibble: 1 × 11

min max mean median stddev var Q1 Q3 iqr skew kurtosis

<int> <int> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 2700 6300 4202. 4050 802. 643131. 3550 4750 1200 0.466 -0.740As noted, we have three different species of penguins in the dataset. Their weight varies a lot. If we want to do the summarising on each for the species, we can group the data by species, before summarising:

R

penguins |>

group_by(species) |>

summarise(min = min(body_mass_g, na.rm = T),

max = max(body_mass_g, na.rm = T),

mean = mean(body_mass_g, na.rm = T),

median = median(body_mass_g, na.rm = T),

stddev = sd(body_mass_g, na.rm = T)

)

OUTPUT

# A tibble: 3 × 6

species min max mean median stddev

<fct> <int> <int> <dbl> <dbl> <dbl>

1 Adelie 2850 4775 3701. 3700 459.

2 Chinstrap 2700 4800 3733. 3700 384.

3 Gentoo 3950 6300 5076. 5000 504.We have removed some summary statistics in order to get a smaller table.

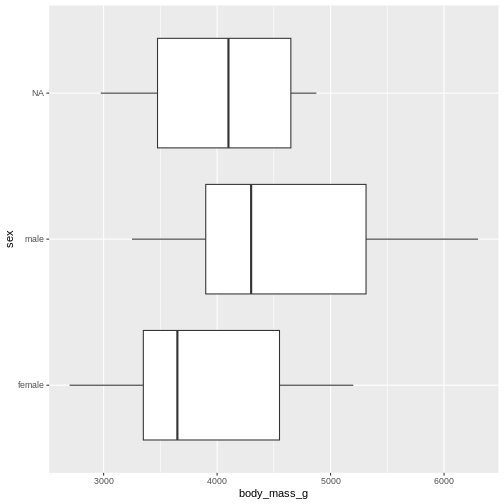

Boxplots

Finally boxplots offers a way of visualising some of the summary statistics:

R

penguins |>

ggplot(aes(x=body_mass_g, y = sex)) +

geom_boxplot()

WARNING

Warning: Removed 2 rows containing non-finite outside the scale range

(`stat_boxplot()`).

The boxplot shows us the median (the fat line in the middel of each box), the 1st and 3rd quartiles (the ends of the boxes), and the range, with the whiskers at each end of the boxes, illustrating the minimum and maximum. Any observations, more than 1.5 times the IQR from either the 1st or 3rd quartiles, are deemed as outliers and would be plotted as individual points in the plot.

Counting

Most of the descriptive functions above are focused on continuous variables, maybe grouped by one or more categorical variables.

What about the categorical themselves?

The one thing we can do looking only at categorical variables, is counting.

Counting the different values in a single categorical variable in

base-R is done using the table(() function

R

table(penguins$sex)

OUTPUT

female male

165 168 Often we are interested in cross tables, tables where we count the different combinations of the values in more than one categorical variable, eg the distribution of the two different penguin sexes on the three different islands:

R

table(penguins$island, penguins$sex)

OUTPUT

female male

Biscoe 80 83

Dream 61 62

Torgersen 24 23We can event group on three (or more) categorical variables, but the output becomes increasingly difficult to read the mote variables we add:

R

table(penguins$island, penguins$sex, penguins$species)

OUTPUT

, , = Adelie

female male

Biscoe 22 22

Dream 27 28

Torgersen 24 23

, , = Chinstrap

female male

Biscoe 0 0

Dream 34 34

Torgersen 0 0

, , = Gentoo

female male

Biscoe 58 61

Dream 0 0

Torgersen 0 0Aggregate

A different way of doing that in base-R is using the

aggregate() function:

R

aggregate(sex ~ island, data = penguins, FUN = length)

OUTPUT

island sex

1 Biscoe 163

2 Dream 123

3 Torgersen 47Here we construct the crosstable using the formula notation, and

specify which function we want to apply on the results. This can be used

to calculate summary statistics on groups, by adjusting the

FUN argument.

Counting in tidyverse

In tidyverse we will typically group the data by the variables we want to count, and then tallying them:

R

penguins |>

group_by(sex) |>

tally()

OUTPUT

# A tibble: 3 × 2

sex n

<fct> <int>

1 female 165

2 male 168

3 <NA> 11group_by works equally well with more than one

group:

R

penguins |>

group_by(sex, species) |>

tally()

OUTPUT

# A tibble: 8 × 3

# Groups: sex [3]

sex species n

<fct> <fct> <int>

1 female Adelie 73

2 female Chinstrap 34

3 female Gentoo 58

4 male Adelie 73

5 male Chinstrap 34

6 male Gentoo 61

7 <NA> Adelie 6

8 <NA> Gentoo 5But the output is in a long format, and often requires some manipulation to get into a wider tabular format.

A shortcut exists in tidyverse, count, which combines

group_by and tally:

R

penguins |>

count(sex, species)

OUTPUT

# A tibble: 8 × 3

sex species n

<fct> <fct> <int>

1 female Adelie 73

2 female Chinstrap 34

3 female Gentoo 58

4 male Adelie 73

5 male Chinstrap 34

6 male Gentoo 61

7 <NA> Adelie 6

8 <NA> Gentoo 5- We have access to a lot of summarising descriptive indicators the the location, spread and shape of our data.

Content from Histograms

Last updated on 2026-02-03 | Edit this page

Estimated time: 0 minutes

Overview

Questions

- What is a histogram?

- What do we use histograms for?

- What is the connection between number of bins, binwidths and breaks?

- How do we chose a suitable number of bins?

Objectives

- Understand what a histogram is, and what it is used for

- Introduce a number of heuristics for chosing the “correct” number of bins

What even is a histogram?

We use histograms to visualise the distribution of some continuous variable. It have values between a minimum and maximum value (range), but are these values equally probable? Probably not.

In a histogram we divide the range in a number of bins, count the number of observations in each bin, and make a plot with a bar for each bin, where the height is equivalent to the number of observations in each bin.

Normally the bins have equal width - equal to the range of the variable (max - min), divided by the number of bins.

How do we do that?

Let us look at some data - penguin data:

R

library(tidyverse)

library(palmerpenguins)

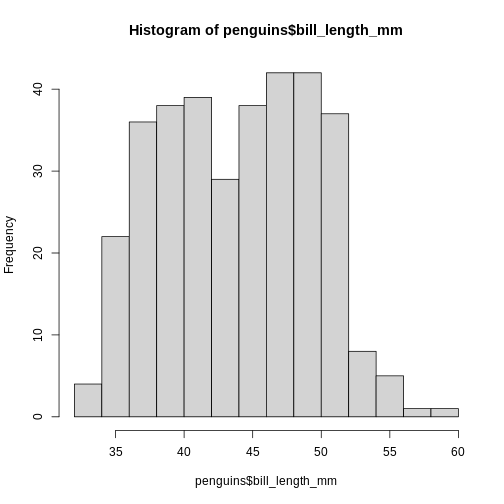

R has a build-in histogram function, hist(), which will

make a basic histogram, of the data we provide it with:

R

hist(penguins$bill_length_mm)

This is not a very nice looking histogram, and ggplot2

provides us with a more easily customisable

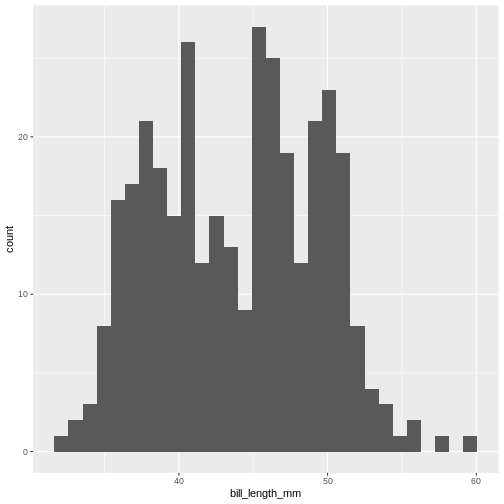

geom_histogram() function:

R

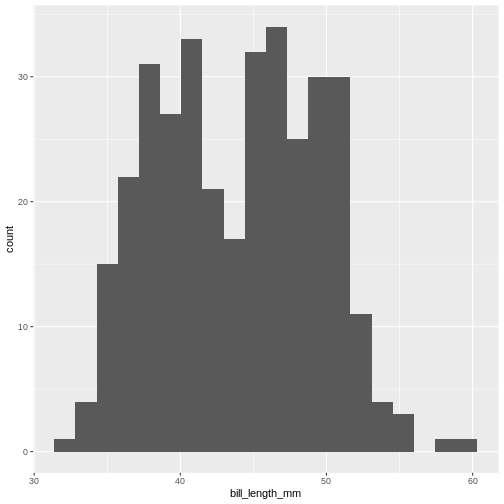

ggplot(penguins, aes(x=bill_length_mm)) +

geom_histogram()

OUTPUT

`stat_bin()` using `bins = 30`. Pick better value `binwidth`.WARNING

Warning: Removed 2 rows containing non-finite outside the scale range

(`stat_bin()`).

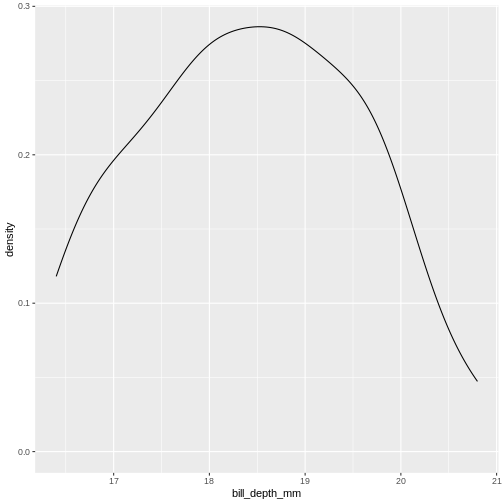

This is the distibution of the length, in millimeter, of the bill (or beak) of a selection of penguins.

By default the geom_histogram() function divides the

range of the data (min = 32.1, max = 59.6 mm) into 30 bins. Each bin

therefore have a width of 0.9167 mm. The first bin contains a single

penguin, that has a beak that is between 32.1 and 32.1 + 0.9187 mm

long.

From this plot we get information about the distribution of bill-lengths of penguins.

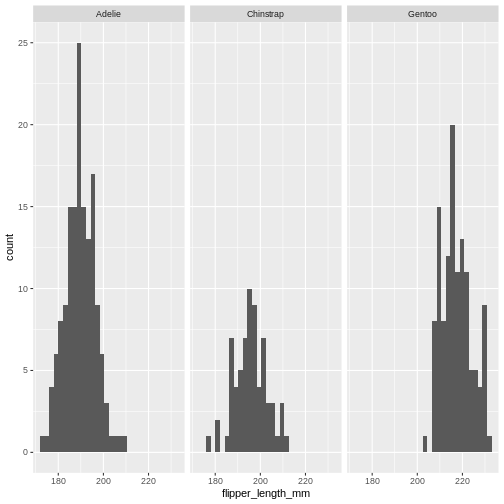

There appears to be several different “peaks” in the plot. This is to be expected as we have three different species of penguins in the data, in addition to having penguins of both sexes.

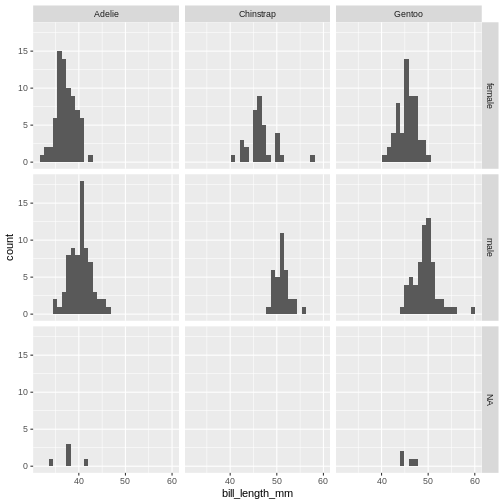

If we want to split the histogram into these different groups, we can

use the possibilities available in ggplot2:

R

ggplot(penguins, aes(x=bill_length_mm)) +

geom_histogram() +

facet_grid(sex~species)

OUTPUT

`stat_bin()` using `bins = 30`. Pick better value `binwidth`.WARNING

Warning: Removed 2 rows containing non-finite outside the scale range

(`stat_bin()`).

The data now looks a bit more normally distributed. And we can observe that male penguins tend to have longer beaks than female penguins. We can also see that the different species of penguins have different bill lengths.

How many bins?

Note the warning we get.

“using bins = 30. Pick better value with

binwidth”

The choice of number of bins have a big influence on how the data is

visualised. By providing the geom_histogram() function with

an argument, bins = xx where xx is the number

of bins we can adjust the number of bins:

Try it yourself

Try to plot the bill_length_mm variable in the penguins dataset with different numbers of bins.

R

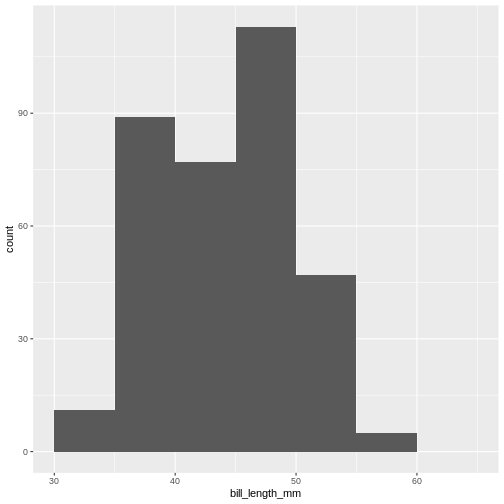

ggplot(penguins, aes(x=bill_length_mm)) +

geom_histogram(bins = 20)

WARNING

Warning: Removed 2 rows containing non-finite outside the scale range

(`stat_bin()`).

By default geom_histogram() choses 30 bins. This default

value has been chosen because it is almost never the correct choice.

ggplot2 is an opinionated package, that tries to force us

to make better plots. And this is the reason for the warning.

How many bins should we chose?

We should chose the number of bins that best tells the story we want to tell about our data. But also the number of bins that does not hide information about our data.

The obvious way to do this is to fiddle with the number of bins, and find the number that best do that.

But that can feel a bit cheaty. How do we rationalise the number of bins in a way that makes sure that this number is not just the one that show what we want the data to show, but the number that actually show the data as it is?

A number of heuristics for chosing the “correct” number of bins exist.

A heuristic is a fancy way of saying “rule of thumb”. “Heuristic” sounds like we know what we are talking about.

Different heuristics for chosing number of bins.

To have an approximately consistent way of describing them, we use these three values:

- k for the number of bins

- h for the width of the bins

- n for the number of observations

We also use a couple of mathematical signs: \(\lceil\) and \(\rceil\). These are “ceiling” functions, and simply mean that we round a number up. Rather than rounding 4.01 to 4, we round up to 5.

Freedman-Diaconis

This heuristic determines the bind-width as:

\[h = 2 \cdot IQR \cdot n^{-1/3}\]

where IQR is the interquartile range of our data, which can be found

using the IQR() function.

The number of bins are then found as

\[ k = \lceil \frac{range}{h} \rceil \]

Try it yourself

How many bins does Freedman-Diaconis prescribe for making a histogram of the bill length of the penguins?

Get all the lengths of the penguin bills, excluding missing values:

R

bill_lengths <- penguins$bill_length_mm |>

na.omit()

Find \(n\):

R

n <- length(bill_lengths)

And the inter quartile range:

R

iqr <- IQR(bill_lengths)

Now find the recommended bin-width:

R

h <- 2*iqr*n^(-1/3)

And then the number of bins:

R

k <- (max(bill_lengths) - min(bill_lengths))/h

Remember to take the ceiling:

R

ceiling(k)

OUTPUT

[1] 11Square root rule

The number of bins \(k\) are found directly from the number of observations, n:

\[k = \lceil\sqrt{n}\rceil\]

Try it yourself

According to the Square Root rule, how many bins should we use for a histogram of the length of penguin bills?

Get all the lengths of the penguin bills, excluding missing values:

R

bill_lengths <- penguins$bill_length_mm |>

na.omit()

Find \(n\):

R

n <- length(bill_lengths)

And calculate the square root of \(n\), rounded up using the

ceiling() function:

R

ceiling(sqrt(n))

OUTPUT

[1] 19The Sturges rule

Here we also find \(k\) directly from the number of observations:

\[ k = \lceil\log_2(n) + 1\rceil \] An implicit assumption is that the data is approximately normally distributed.

\(\log_2\) is found using the

log2() function.

Try it yourself

According to the Sturgess rule, how many bins should we use for a histogram of the length of penguin bills?

Get all the lengths of the penguin bills, excluding missing values:

R

bill_lengths <- penguins$bill_length_mm |>

na.omit()

Find \(n\):

R

n <- length(bill_lengths)

Now find \(k\)

R

k <- log2(n) + 1

And remember to round up:

R

ceiling(k)

OUTPUT

[1] 10The Rice Rule

Again we get \(k\) directly from \(n\):

\[k = \lceil 2n^{1/3}\rceil\]

Try it yourself

According to the Rice rule, how many bins should we use for a histogram of the length of penguin bills?

Get all the lengths of the penguin bills, excluding missing values:

R

bill_lengths <- penguins$bill_length_mm |>

na.omit()

Find \(n\):

R

n <- length(bill_lengths)

And then find \(k\):

R

k <- 2*n^(1/3)

Remember to round up:

R

ceiling(k)

OUTPUT

[1] 14Doanes Rule

This rule is a bit more complicated:

\[k= 1 + \log_2(n) + \log_2(1+ |g_1|/\sigma_{g1})\]

\(|g_1|\) is the absolute value of

the estimated 3rd moment skewness of the data, found by using the

skewness() function from the e1071 package,

and \(\sigma_{g1}\) is found by:

\[\sigma_{g1} = \sqrt{6(n-2)/((n+1)(n+3))}\]

Doanes rule basically adds extra bins based on how skewed the data is, and works better than Sturges’ rule for non-normal distributions.

Try it yourself

According to Doanes rule, how many bins should we use for a histogram of the length of penguin bills?

Get all the lengths of the penguin bills, excluding missing values:

R

bill_lengths <- penguins$bill_length_mm |>

na.omit()

Find \(n\):

R

n <- length(bill_lengths)

Find \(g_1\):

R

library(e1071)

OUTPUT

Attaching package: 'e1071'OUTPUT

The following object is masked from 'package:ggplot2':

elementR

g1 <- skewness(bill_lengths)

Find \(\sigma_{g1}\):

R

s_g1 <- sqrt(6*(n-2)/((n+1)*(n+3)))

Now we can find \(k\). Remember to take the absolute value of \(g_1\)

R

k <- 1 + log2(n) + log2(1+abs(g1)/s_g1)

And then round up \(k\) to get the number of bins:

R

ceiling(k)

OUTPUT

[1] 10Scott’s rule

Here we get the bin-width \(h\) using this expression:

\[h = 3.49 \frac{\sigma}{n^{1/3}}\] Where \(\sigma\) is the standard deviation of the data. This rule implicitly assumes that the data is normally distributed.

Try it yourself

According to Scott’s rule, how many bins should we use for a histogram of the length of penguin bills?

Get all the lengths of the penguin bills, excluding missing values:

R

bill_lengths <- penguins$bill_length_mm |>

na.omit()

Find \(n\):

R

n <- length(bill_lengths)

Find \(\sigma\):

R

sigma <- sd(bill_lengths)

Now we can find the bin-width \(h\):

R

h <- 3.49*sigma*(n^(-1/3))

And from that, the number of bins \(k\):

R

k <- (max(bill_lengths) - min(bill_lengths))/h

Remember to round up \(k\):

R

ceiling(k)

OUTPUT

[1] 11That is difficult

Rather than doing these calculations on our own, we can read the help

file for the hist() function. The argument

breaks allow us to specify where the breaks in the

histogram - the splits in bins - should occur.

We can see that the default value is “Sturges”. And under details we

can see the other options that hist() can work with,

“Scott” and “FD” for Freedman-Diaconis. We also get a hint of the

functions that can return the number of bins:

R

nclass.Sturges(penguins$bill_length_mm)

OUTPUT

[1] 10nclass.FD and nclass.scott works similarly,

but note that it is necessary to remove missing values. If one of the

other heuristics is needed, we either need to do the calculations

ourself - or try to identify a package that contains functions to do

it.

Another way

With these heuristics, we can calculate the recommended number of bins for making a histogram. And with that number, knowing the range of the data, we can calculate the binwidth.

Either of those can be used as an argument in

geom_histogram(), bins= and

binwidth= respectively.

However, for effective communication, we might consider a third way. The bill length of the penguins ranges from 32.1 to 59.6 mm. With 9 bins we will get a bin width of 3.055556 mm. The first bin will therefore start at 32.1 and end at 35.15556 mm, and the second will start at 35.15556 mm and end at 38.21112. These are the breaks, and they are not very intuitive.

Instead we might consider choosing the breaks ourself. Having a first bin that starts at 32 mm and ends at 35, and the a second bin that starts at 35 mm and ends at 38 might be beneficial for understanding the histogram.

Those breaks can also be provide to the geom_histogram()

function - we just need to calculate them our self.

The seq() function is useful for this.

This function returns a sequence of numbers from a start value, to and end value, in steps that we can chose:

R

seq(from = 30, to = 65, by = 5)

OUTPUT

[1] 30 35 40 45 50 55 60 65You will have to think about the from and the

to arguments, to not exclude any observations, but this

method give us much more natural breaks in the histogram:

R

penguins |>

ggplot(aes(x=bill_length_mm)) +

geom_histogram(breaks = seq(from = 30, to = 65, by = 5))

WARNING

Warning: Removed 2 rows containing non-finite outside the scale range

(`stat_bin()`).

Do not abuse the option of specifying breaks!

Instead of using the seq() function we could construct

the breaks by hand:

R

c(30, 35, 40, 45, 50, 55, 60, 65)

And it might be tempting enter breaks that would lead to uneven binwidths:

R

c(30, 40, 42, 45, 50, 55, 60, 65)

Do not do this. This will, except in very rare cases, rightfully be considered misleading. Always use a consistent binwidth!

- Histograms are used for visualising the distribution of data

- A lot of different rules for chosing number of bins exists

- Binwidth and number of bins are equivalent

- Chose the number of bins that best supports your data story. Without hiding inconvenient truths about your data.

- Never use unequal binwidths

- Consider using natural breaks as an alternative

Content from Table One

Last updated on 2026-02-03 | Edit this page

Estimated time: 12 minutes

Overview

Questions

- How do you make a Table One?

Objectives

- Explain what a Table One is

- Know how to make a Tabel One and adjust key parameters

What is a “Table One”?

Primarily used in medical and epidemiological research, a Table One is typically the first table in any publication using data.

It presents the baseline characteristics of the participants in a study, and provides a concise overview of the relevant demographic and clinical variables.

It typically compares different groups (male~female, treatment~control), to highlight similarities and differences.

It can look like this:

|

control

|

case

|

Overall

|

||||

|---|---|---|---|---|---|---|

|

no (N=298) |

yes (N=48) |

no (N=135) |

yes (N=29) |

no (N=433) |

yes (N=77) |

|

| Age (years) | ||||||

| Mean (SD) | 61.3 (4.75) | 58.9 (5.68) | 61.5 (4.85) | 58.1 (5.32) | 61.4 (4.78) | 58.6 (5.53) |

| Median [Min, Max] | 62.0 [46.0, 69.0] | 59.0 [46.0, 68.0] | 62.0 [45.0, 69.0] | 58.0 [49.0, 68.0] | 62.0 [45.0, 69.0] | 58.0 [46.0, 68.0] |

| testost | ||||||

| Mean (SD) | 25.3 (13.2) | 22.2 (10.7) | 27.6 (16.1) | 28.2 (15.6) | 26.0 (14.2) | 24.4 (13.0) |

| Median [Min, Max] | 23.0 [4.00, 111] | 21.5 [8.00, 63.0] | 25.0 [6.00, 144] | 24.0 [10.0, 69.0] | 23.0 [4.00, 144] | 22.0 [8.00, 69.0] |

| Missing | 6 (2.0%) | 2 (4.2%) | 3 (2.2%) | 1 (3.4%) | 9 (2.1%) | 3 (3.9%) |

| prolactn | ||||||

| Mean (SD) | 9.60 (5.10) | 13.7 (12.3) | 10.8 (6.79) | 9.57 (3.29) | 9.99 (5.70) | 12.2 (10.1) |

| Median [Min, Max] | 8.16 [1.96, 37.3] | 8.81 [3.87, 55.8] | 9.30 [2.66, 59.9] | 8.88 [4.49, 17.6] | 8.64 [1.96, 59.9] | 8.84 [3.87, 55.8] |

| Missing | 14 (4.7%) | 0 (0%) | 6 (4.4%) | 1 (3.4%) | 20 (4.6%) | 1 (1.3%) |

Please note that the automatic styling of this site results in a table-one that is not very nice looking.

We have 510 participants in a study, split into control and case groups, and further subdivided into two groups based on PostMenopausal Hormone use. It describes the distribution of sex and concentration of testosterone and prolactin in a blood sample.

How do we make that?

Structuring the data

Most things in R are simple to do (but rarely simple to understand) when the data has the correct structure.

If we follow the general rules of thumb for tidy data, we are off to a good start. This is the structure of the data set we are working with here - after we have made some modifications regarding labels, levels and units.

R

head(blood)

OUTPUT

# A tibble: 6 × 8

ID matchid case curpmh ageblood estradol testost prolactn

<dbl> <dbl> <fct> <fct> <dbl> <dbl> <dbl> <dbl>

1 100013 164594 control yes 46 57 25 11.1

2 100241 107261 control no 65 11 NA 2.8

3 100696 110294 control yes 66 3 8 38

4 101266 101266 case no 57 4 6 8.9

5 101600 101600 case no 66 6 25 6.9

6 102228 155717 control yes 57 10 31 13.9The important thing to note is that when we stratify the summary statistics by some variable, this variable have to be a categorical variable. The variables we want to do summary statistics on also have to have the correct type. Are the values categorical, the column in the dataframe have to actually be categorical. Are they numeric, the data type have to be numeric.

And having the data - how do we actually do it?

A number of packages making it easy to make a Table One exists. Here

we look at the package table1.

The specific way of doing it depends on the data available. If we do not have data on the weight of the participants, we are not able to describe the distribution of their weight.

Let us begin by looking at the data. We begin by loading the two

packages tidyverse and table1. We then read in

the data from the csv-file “BLOOD.csv”, which we have downloaded

from this link.

R

library(tidyverse)

library(table1)

blood <- read_csv("data/BLOOD.csv")

head(blood)

OUTPUT

# A tibble: 6 × 9

ID matchid case curpmh ageblood estradol estrone testost prolactn

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 100013 164594 0 1 46 57 65 25 11.1

2 100241 107261 0 0 65 11 26 999 2.8

3 100696 110294 0 1 66 3 999 8 38

4 101266 101266 1 0 57 4 18 6 8.9

5 101600 101600 1 0 66 6 18 25 6.9

6 102228 155717 0 1 57 10 999 31 13.9510 rows. Its a case-control study, where the ID represents one individual, and matchid gives us the link between cases and controls. Ageblood is the age of the individual at the time when the blood sample was drawn, and we then have levels of four different hormones.

The data contains missing values, coded as “999.0” for estrone and testost, and 99.99 for prolactin.

Let us fix that:

R

blood <- blood |>

mutate(estrone = na_if(estrone, 999.0)) |>

mutate(testost = na_if(testost, 999.0)) |>

mutate(prolactn = na_if(prolactn, 99.99))

We then ensure that categorical values are stored as categorical values, and adjust the labels of those categorical values:

R

blood <- blood |>

mutate(case = factor(case, labels = c("control", "case"))) |>

mutate(curpmh = factor(curpmh, labels = c("no", "yes")))

And now we can make our table one like this. Note that we only include testosterone and prolactin, in order to get a more manageble table 1:

R

table1(~ageblood + testost + prolactn|case + curpmh, data = blood)

|

control

|

case

|

Overall

|

||||

|---|---|---|---|---|---|---|

|

no (N=298) |

yes (N=48) |

no (N=135) |

yes (N=29) |

no (N=433) |

yes (N=77) |

|

| ageblood | ||||||

| Mean (SD) | 61.3 (4.75) | 58.9 (5.68) | 61.5 (4.85) | 58.1 (5.32) | 61.4 (4.78) | 58.6 (5.53) |

| Median [Min, Max] | 62.0 [46.0, 69.0] | 59.0 [46.0, 68.0] | 62.0 [45.0, 69.0] | 58.0 [49.0, 68.0] | 62.0 [45.0, 69.0] | 58.0 [46.0, 68.0] |

| testost | ||||||

| Mean (SD) | 25.3 (13.2) | 22.2 (10.7) | 27.6 (16.1) | 28.2 (15.6) | 26.0 (14.2) | 24.4 (13.0) |

| Median [Min, Max] | 23.0 [4.00, 111] | 21.5 [8.00, 63.0] | 25.0 [6.00, 144] | 24.0 [10.0, 69.0] | 23.0 [4.00, 144] | 22.0 [8.00, 69.0] |

| Missing | 6 (2.0%) | 2 (4.2%) | 3 (2.2%) | 1 (3.4%) | 9 (2.1%) | 3 (3.9%) |

| prolactn | ||||||

| Mean (SD) | 9.60 (5.10) | 13.7 (12.3) | 10.8 (6.79) | 9.57 (3.29) | 9.99 (5.70) | 12.2 (10.1) |

| Median [Min, Max] | 8.16 [1.96, 37.3] | 8.81 [3.87, 55.8] | 9.30 [2.66, 59.9] | 8.88 [4.49, 17.6] | 8.64 [1.96, 59.9] | 8.84 [3.87, 55.8] |

| Missing | 14 (4.7%) | 0 (0%) | 6 (4.4%) | 1 (3.4%) | 20 (4.6%) | 1 (1.3%) |

It is a good idea, and increases readability, to add labels and units

to the variables. The table1 package provides functions for

that:

R

label(blood$curpmh) <- "current_pmh"

label(blood$case) <- "case_control"

label(blood$ageblood) <- "Age"

units(blood$ageblood) <- "years"

This will add labels to the plot, and allow us to give the data more meaningful names and units without changing the date it self. This looks nicer, and is easier to read:

R

table1(~ageblood + testost + prolactn|case + curpmh, data = blood)

|

control

|

case

|

Overall

|

||||

|---|---|---|---|---|---|---|

|

no (N=298) |

yes (N=48) |

no (N=135) |

yes (N=29) |

no (N=433) |

yes (N=77) |

|

| Age (years) | ||||||

| Mean (SD) | 61.3 (4.75) | 58.9 (5.68) | 61.5 (4.85) | 58.1 (5.32) | 61.4 (4.78) | 58.6 (5.53) |

| Median [Min, Max] | 62.0 [46.0, 69.0] | 59.0 [46.0, 68.0] | 62.0 [45.0, 69.0] | 58.0 [49.0, 68.0] | 62.0 [45.0, 69.0] | 58.0 [46.0, 68.0] |

| testost | ||||||

| Mean (SD) | 25.3 (13.2) | 22.2 (10.7) | 27.6 (16.1) | 28.2 (15.6) | 26.0 (14.2) | 24.4 (13.0) |

| Median [Min, Max] | 23.0 [4.00, 111] | 21.5 [8.00, 63.0] | 25.0 [6.00, 144] | 24.0 [10.0, 69.0] | 23.0 [4.00, 144] | 22.0 [8.00, 69.0] |

| Missing | 6 (2.0%) | 2 (4.2%) | 3 (2.2%) | 1 (3.4%) | 9 (2.1%) | 3 (3.9%) |

| prolactn | ||||||

| Mean (SD) | 9.60 (5.10) | 13.7 (12.3) | 10.8 (6.79) | 9.57 (3.29) | 9.99 (5.70) | 12.2 (10.1) |

| Median [Min, Max] | 8.16 [1.96, 37.3] | 8.81 [3.87, 55.8] | 9.30 [2.66, 59.9] | 8.88 [4.49, 17.6] | 8.64 [1.96, 59.9] | 8.84 [3.87, 55.8] |

| Missing | 14 (4.7%) | 0 (0%) | 6 (4.4%) | 1 (3.4%) | 20 (4.6%) | 1 (1.3%) |

More advanced stuff

We might want to be able to precisely control the summary statistics presented in the table.

We can do that by specifying input to the arguments

render.continuous and render.categorical that

control how continuous and categorical data respectively, is shown in

the table.

The simple way of doing that is by using abbrevieated function names. We only include testosterone and prolactin in the the table to save space:

R

table1(~ageblood + testost + prolactn|case + curpmh, data = blood,

render.continuous=c(.="Mean (SD%)", .="Median [Min, Max]",

"Geom. mean (Geo. SD%)"="GMEAN (GSD%)"))

|

control

|

case

|

Overall

|

||||

|---|---|---|---|---|---|---|

|

no (N=298) |

yes (N=48) |

no (N=135) |

yes (N=29) |

no (N=433) |

yes (N=77) |

|

| Age (years) | ||||||

| Mean (SD%) | 61.3 (4.75%) | 58.9 (5.68%) | 61.5 (4.85%) | 58.1 (5.32%) | 61.4 (4.78%) | 58.6 (5.53%) |

| Median [Min, Max] | 62.0 [46.0, 69.0] | 59.0 [46.0, 68.0] | 62.0 [45.0, 69.0] | 58.0 [49.0, 68.0] | 62.0 [45.0, 69.0] | 58.0 [46.0, 68.0] |

| Geom. mean (Geo. SD%) | 61.1 (1.08%) | 58.7 (1.10%) | 61.3 (1.08%) | 57.9 (1.10%) | 61.2 (1.08%) | 58.4 (1.10%) |

| testost | ||||||

| Mean (SD%) | 25.3 (13.2%) | 22.2 (10.7%) | 27.6 (16.1%) | 28.2 (15.6%) | 26.0 (14.2%) | 24.4 (13.0%) |

| Median [Min, Max] | 23.0 [4.00, 111] | 21.5 [8.00, 63.0] | 25.0 [6.00, 144] | 24.0 [10.0, 69.0] | 23.0 [4.00, 144] | 22.0 [8.00, 69.0] |

| Geom. mean (Geo. SD%) | 22.4 (1.65%) | 20.0 (1.58%) | 24.6 (1.60%) | 24.6 (1.69%) | 23.1 (1.64%) | 21.6 (1.63%) |

| Missing | 6 (2.0%) | 2 (4.2%) | 3 (2.2%) | 1 (3.4%) | 9 (2.1%) | 3 (3.9%) |

| prolactn | ||||||

| Mean (SD%) | 9.60 (5.10%) | 13.7 (12.3%) | 10.8 (6.79%) | 9.57 (3.29%) | 9.99 (5.70%) | 12.2 (10.1%) |

| Median [Min, Max] | 8.16 [1.96, 37.3] | 8.81 [3.87, 55.8] | 9.30 [2.66, 59.9] | 8.88 [4.49, 17.6] | 8.64 [1.96, 59.9] | 8.84 [3.87, 55.8] |

| Geom. mean (Geo. SD%) | 8.59 (1.58%) | 10.7 (1.89%) | 9.63 (1.58%) | 9.05 (1.41%) | 8.90 (1.59%) | 10.1 (1.73%) |

| Missing | 14 (4.7%) | 0 (0%) | 6 (4.4%) | 1 (3.4%) | 20 (4.6%) | 1 (1.3%) |

table1 recognizes the following summary statisticis: N,

NMISS, MEAN, SD, CV, GMEAN, GCV, MEDIAN, MIN, MAX, IQR, Q1, Q2, Q3, T1,

T2, FREQ, PCT

Details can be found in the help to the function

stats.default()

Note that they are case-insensitive, and we can write Median or mediAn instead of median.

Also note that we write .="Mean (SD%)" which will be

recognized as the functions mean() and sd(),

but also that the label shown should be “Mean (SD%)”.

If we want to specify the label, we can write

"Geom. mean (Geo. SD%)"="GMEAN (GSD%)"

Change the labels

We have two unusual values in this table - geometric mean and geometric standard deviation. Change the code to write out “Geom.” and “geo.” as geometric.

R

table1(~ageblood + testost + prolactn |case + curpmh, data = blood,

render.continuous=c(.="Mean (SD%)", .="Median [Min, Max]",

"Geometric mean (Geometric SD%)"="GMEAN (GSD%)"))

The geometric mean of two numbers is the squareroot of the product of the two numbers. If we have three numbers, we take the cube root of the product. In general:

\[\left( \prod_{i=1}^{n} x_i \right)^{\frac{1}{n}}\]

The geometric standard deviation is defined by: \[ \exp\left(\sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( \log x_i - \frac{1}{n} \sum_{j=1}^{n} \log x_j \right)^2}\right)\]

Very advanced stuff

If we want to specify the summary statistics very precisely, we have to define a function ourself:

R

my_summary <- function(x){

c("","Median" = sprintf("%.3f", median(x, na.rm = TRUE)),

"Variance" = sprintf("%.1f", var(x, na.rm=TRUE)))

}

table1(~ageblood + testost + prolactn|case + curpmh, data = blood,

render.continuous = my_summary)

|

control

|

case

|

Overall

|

||||

|---|---|---|---|---|---|---|

|

no (N=298) |

yes (N=48) |

no (N=135) |

yes (N=29) |

no (N=433) |

yes (N=77) |

|

| Age (years) | ||||||

| Median | 62.000 | 59.000 | 62.000 | 58.000 | 62.000 | 58.000 |

| Variance | 22.6 | 32.3 | 23.5 | 28.3 | 22.8 | 30.6 |

| testost | ||||||

| Median | 23.000 | 21.500 | 25.000 | 24.000 | 23.000 | 22.000 |

| Variance | 173.6 | 115.0 | 257.7 | 241.9 | 200.4 | 169.0 |

| Missing | 6 (2.0%) | 2 (4.2%) | 3 (2.2%) | 1 (3.4%) | 9 (2.1%) | 3 (3.9%) |

| prolactn | ||||||

| Median | 8.155 | 8.805 | 9.300 | 8.880 | 8.640 | 8.835 |

| Variance | 26.1 | 151.3 | 46.1 | 10.8 | 32.5 | 102.8 |

| Missing | 14 (4.7%) | 0 (0%) | 6 (4.4%) | 1 (3.4%) | 20 (4.6%) | 1 (1.3%) |

We do not need to use the sprintf() function,

but it is a very neat way of combining text with numeric variables

because it allows us to format them directly.

Summary statistics for categorical data can be adjusted similarly, by

specifying render.categorical.

What does %.3f actually do?

Can you guess what the formatting in ´sprintf´ does?

Try to change “%.3f” in the function to “%.2f”.

R

my_summary <- function(x){

c("","Median" = sprintf("%.3f", median(x, na.rm = TRUE)),

"Variance" = sprintf("%.1f", var(x, na.rm=TRUE)))

}

sprintf uses a bit of an arcane way of specifying the

way numbers should be formatted when we combine them with text. The

“%”-sign specifies that “this is where we place the number in the

function”. “.3f” specifies that we are treating the number as a floating

point number (which is just a fancy way of saying that it is a decimal

number), and that we would like three digits after the decimal

point.

Whats up with that blank line?

Note that in the function, we define a vector as output, with three elements:

R

my_summary <- function(x){

c("",

"Median" = sprintf("%.3f", median(x, na.rm = TRUE)),

"Variance" = sprintf("%.1f", var(x, na.rm=TRUE)))

}

Calculating and formatting the median and the varianse is pretty straightforward.

But the first element is an empty string. Whats up with that?

Try to remove the empty string from the function, and use it is a table one as previously shown:

R

my_summary <- function(x){

c("Median" = sprintf("%.3f", median(x, na.rm = TRUE)),

"Variance" = sprintf("%.1f", var(x, na.rm=TRUE)))

}

table1(~ageblood + testost + prolactn|case + curpmh, data = blood,

render.continuous = my_summary)

The line beginning with “Median” does not show up, but the median value is shown next to the “Age” and “Weight” lines.

Primarily of use if there are medical students on the course

- A Table One provides a compact describtion of the data we are working with

- With a little bit of work we can control the content of the table.

Content from Table One - gt

Last updated on 2026-02-03 | Edit this page

Estimated time: 12 minutes

Overview

Questions

- How do you make a Table One?

- How do you make a Table One that is easy to configure?

Objectives

- Explain what a Table One is

- Know how to make a Tabel One and adjust key parameters

What is a “Table One”?

Primarily used in medical and epidemiological research, a Table One is typically the first table in any publication using data.

It presents the baseline characteristics of the participants in a study, and provides a concise overview of the relevant demographic and clinical variables.

It typically compares different groups (male~female, treatment~control), to highlight similarities and differences.

It can look like this:

|

control (N=346) |

case (N=164) |

Overall (N=510) |

|

|---|---|---|---|

| Age (years) | |||

| Mean (SD) | 61.0 (4.95) | 60.9 (5.09) | 61.0 (4.99) |

| Median [Min, Max] | 62.0 [46.0, 69.0] | 62.0 [45.0, 69.0] | 62.0 [45.0, 69.0] |

| testost | |||

| Mean (SD) | 24.9 (12.9) | 27.7 (15.9) | 25.8 (14.0) |

| Median [Min, Max] | 22.0 [4.00, 111] | 25.0 [6.00, 144] | 23.0 [4.00, 144] |

| Missing | 8 (2.3%) | 4 (2.4%) | 12 (2.4%) |

| prolactn | |||

| Mean (SD) | 10.2 (6.77) | 10.6 (6.32) | 10.3 (6.63) |

| Median [Min, Max] | 8.27 [1.96, 55.8] | 9.18 [2.66, 59.9] | 8.67 [1.96, 59.9] |

| Missing | 14 (4.0%) | 7 (4.3%) | 21 (4.1%) |

R

tbl_summary(blood,

by = case,

type = all_continuous() ~ "continuous2", # for at få multilinie summary stats

include = c(ageblood, testost, curpmh),

statistic = all_continuous() ~ c(

"{mean} ({min}, {max})",

"{median} ({p25}, {p75})"

)

) |>

add_overall(last = TRUE)

| Characteristic |

control N = 3461 |

case N = 1641 |

Overall N = 5101 |

|---|---|---|---|

| Age |

|

|

|

| Mean (Min, Max) | 61.0 (46.0, 69.0) | 60.9 (45.0, 69.0) | 61.0 (45.0, 69.0) |

| Median (Q1, Q3) | 62.0 (57.0, 65.0) | 62.0 (57.0, 65.0) | 62.0 (57.0, 65.0) |

| testost |

|

|

|

| Mean (Min, Max) | 25 (4, 111) | 28 (6, 144) | 26 (4, 144) |

| Median (Q1, Q3) | 22 (16, 31) | 25 (19, 33) | 23 (17, 31) |

| Unknown | 8 | 4 | 12 |

| current_pmh | 48 (14%) | 29 (18%) | 77 (15%) |

| 1 n (%) | |||

Og det gør vi så med gt i stedet. er der lettere måder? Ja, det er der. Link til lettere måde. Men! det her giver os ret omfattende muligheder for at tilpasse tabellen.

Herunder er vi ikke helt i mål endnu. Men vi er ret tæt.

R

library(dplyr)

library(gtsummary)

names(blood)

OUTPUT

[1] "ID" "matchid" "case" "curpmh" "ageblood" "estradol" "testost"

[8] "prolactn"R

# grunddata

base <- blood |>

select(ageblood, grade, stage, trt) |>

mutate(grade = paste("Grade", grade))

ERROR

Error in `select()`:

! Can't select columns that don't exist.

✖ Column `grade` doesn't exist.R

# rækkefølge på paneler: Overall først, derefter Grade I/II/III

lvl <- c("Overall", paste("Grade", levels(trial$grade)))

# lav et samlet datasæt med et ekstra "Overall"-panel

df <- bind_rows(

base |> mutate(.panel = "Overall"),

base |> mutate(.panel = grade)

) |>

mutate(.panel = factor(.panel, levels = lvl))

ERROR

Error:

! object 'base' not foundR

# tabel: tre strata + et overall-stratum

tbl <- df |>

tbl_strata(

strata = .panel,

.tbl_fun = ~ .x |>

select(-grade) |>

tbl_summary(by = trt, missing = "no") |>

add_n(),

.header = "**{strata}**, N = {n}"

)

ERROR

Error in `tbl_strata()`:

! The `data` argument must be class <data.frame/survey.design>, not a

function.R

tbl

OUTPUT

function (src, ...)

{

UseMethod("tbl")

}

<bytecode: 0x562a635ecad8>

<environment: namespace:dplyr>Primarily of use if there are medical students on the course

- A Table One provides a compact describtion of the data we are working with

- With a little bit of work we can control the content of the table.

Content from Tidy Data

Last updated on 2026-02-03 | Edit this page

Estimated time: 12 minutes

Overview

Questions

- How do we structure our data best?

Objectives

- Explain what tidy data is

Introduction

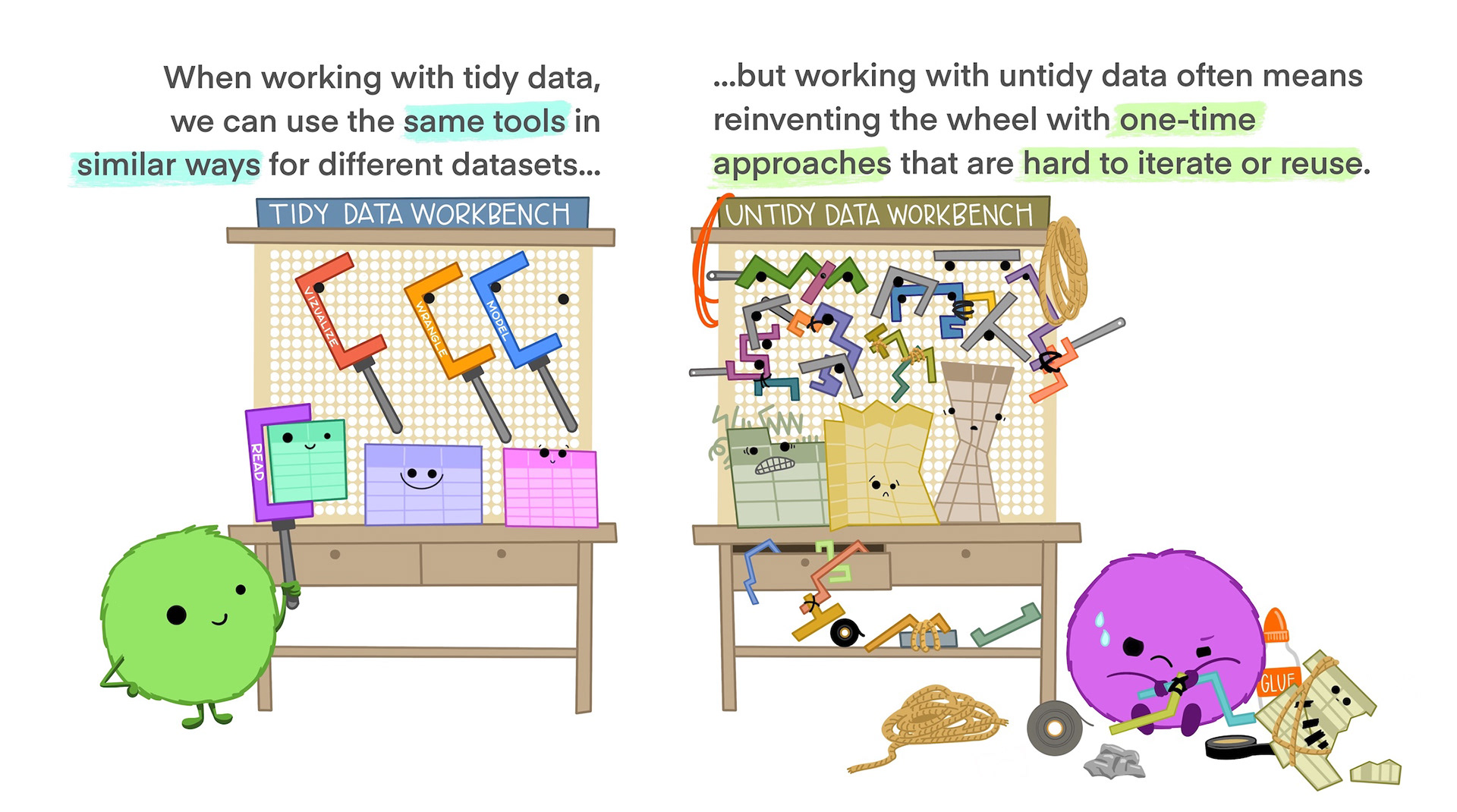

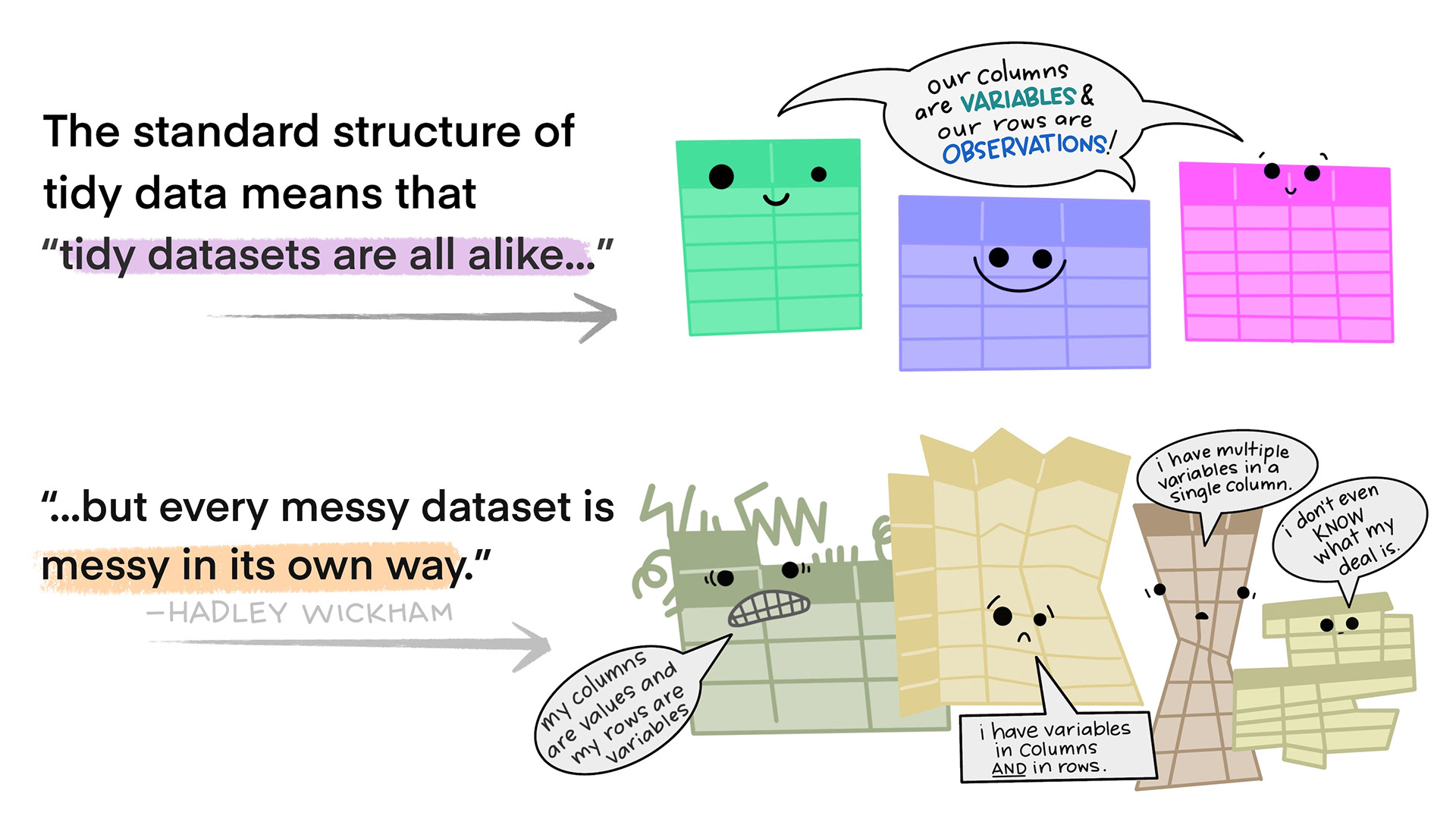

Most of what we want to do with our data is relatively simple. If the data is structured in the right way.

Working within the paradigm of tidyverse it is

preferable if the data is tidy.

Tidy data is not the opposite of messy data. Data can be nice and well structured, tidy as in non-messy, without being tidy in the way we understand it in this context.

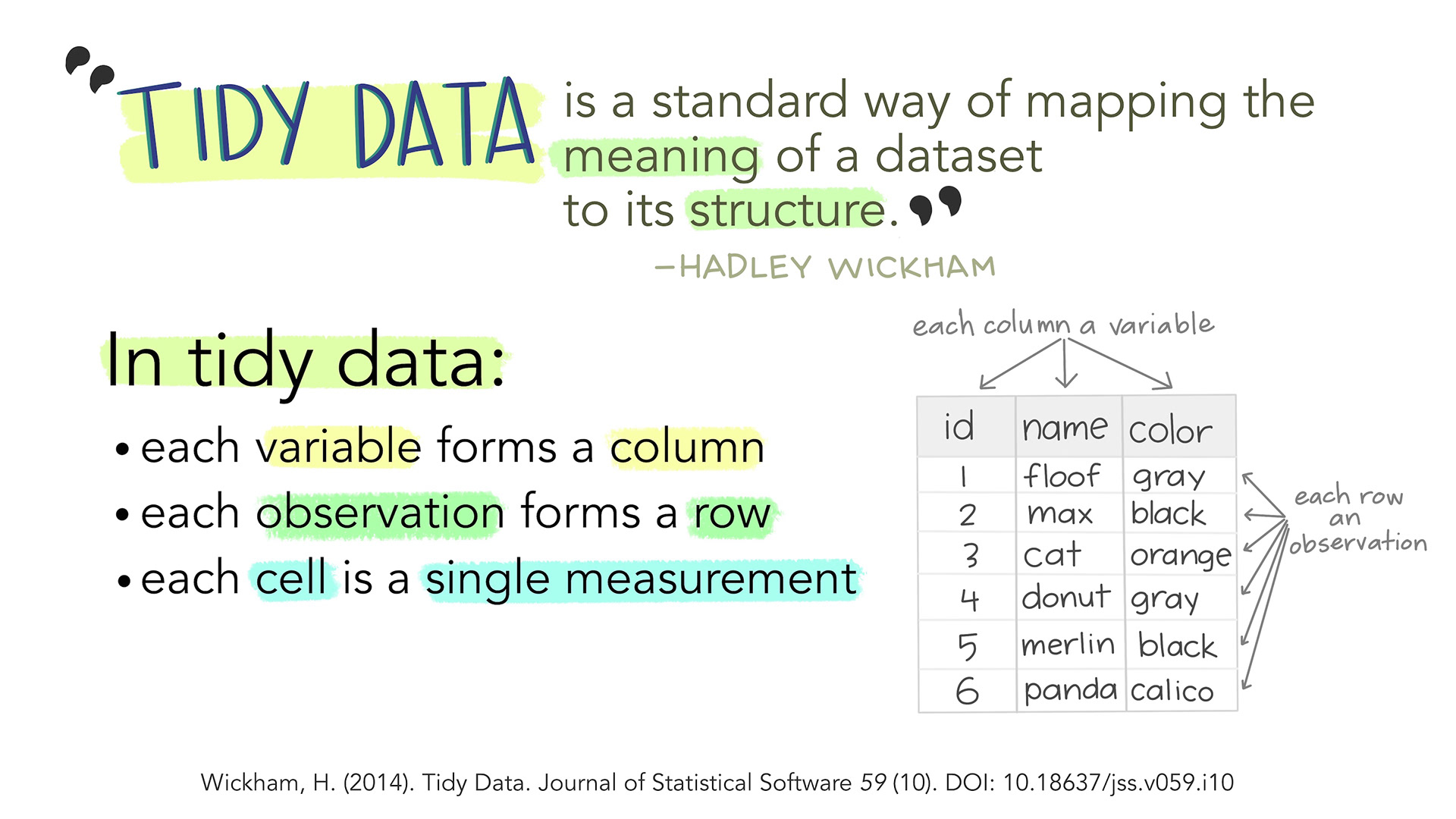

Tidy data in the world of R, especially the dialect of R we call tidyverse, are characterized by:

- Each variable is a column; each column is a variable.

- Each observation is a row; each row is an observation.

- Each value is a cell; each cell is a single value.

This way of structuring our data is useful not only in R, but also in other software packages.

An examples

This is an example of untidy data, on new cases of tubercolosis in Afghanistan. It is well structured, however there are information in the column names.

“new_sp_m014” describes “new” cases. Diagnosed with the “sp” method (culturing a sample of sputum and identifying the presence of Mycobacterium Tuberculosis bacteria). In “m” meaning males, between the ages of 0 and 14.

Picking out information on all new cases eg. distribution between the two sexes is difficult. Similar problems arise if we want to follow the total number of new cases.

OUTPUT

# A tibble: 10 × 6

country year new_sp_m014 new_sp_m1524 new_sp_m2534 new_sp_m3544

<chr> <dbl> <dbl> <dbl> <dbl> <dbl>

1 Afghanistan 2000 52 228 183 149

2 Afghanistan 2001 129 379 349 274

3 Afghanistan 2002 90 476 481 368

4 Afghanistan 2003 127 511 436 284

5 Afghanistan 2004 139 537 568 360

6 Afghanistan 2005 151 606 560 472

7 Afghanistan 2006 193 837 791 574

8 Afghanistan 2007 186 856 840 597

9 Afghanistan 2008 187 941 773 545

10 Afghanistan 2009 200 906 705 499Getting this data on a tidy format is not trivial, but a resulting, tidy, organised dataset would look something like this:

OUTPUT

# A tibble: 40 × 7

country year new method sex age_group cases

<fct> <dbl> <fct> <fct> <fct> <fct> <dbl>

1 Afghanistan 2000 new sp m 0-14 52

2 Afghanistan 2000 new sp m 15-24 228

3 Afghanistan 2000 new sp m 25-34 183

4 Afghanistan 2000 new sp m 35-44 149

5 Afghanistan 2001 new sp m 0-14 129

6 Afghanistan 2001 new sp m 15-24 379

7 Afghanistan 2001 new sp m 25-34 349

8 Afghanistan 2001 new sp m 35-44 274

9 Afghanistan 2002 new sp m 0-14 90

10 Afghanistan 2002 new sp m 15-24 476

# ℹ 30 more rowsThe fact that we are recording “new” cases is now a variable in it self. The method used is also a variable, and the categorical variabel sex is similarly a separate variable as is the age group.

The variables new, method and

sex might appear redundant - all values are identical,

however the entire dataset contains data on non-new cases, other methods

for diagnosis and the other sex, recorded as “f”.

Do’s and dont’s in Excel

Excel is a very useful tool, especially for collecting data.

But even though we are able to do everything we can do in R, in Excel, we will normally do the main part of our work with data in R.

It is therefor a very good idea to think about how we collect and organise the data in Excel, to make our life easier later on.

We have collected some good rules of thumb for structuring data in Excel, based on time-consuming and traumatic experiences wrangling data from Excel to R.

Always

- Use one column for one variable

- Use one row for one observation

- Use one cell for one value

- Begin your data in the upper left corner (cell A1)

- Use one sheet for each type of data

Never (EVER!)

- Modify your raw data - always make a copy before making any change

- Merge cells

- Use colours for information

Illustrations from the Openscapes blog Tidy Data for reproducibility, efficiency, and collaboration by Julia Lowndes and Allison Horst

- tidy data provides a consistent way of organizing data

Content from The normal distribution

Last updated on 2026-02-03 | Edit this page

Estimated time: 12 minutes

Overview

Questions

- What even is a normal distribution?

Objectives

- Explain how to use markdown with the new lesson template

- Demonstrate how to include pieces of code, figures, and nested challenge blocks

Inline instructor notes can help inform instructors of timing challenges associated with the lessons. They appear in the “Instructor View”

What is the normal distribution

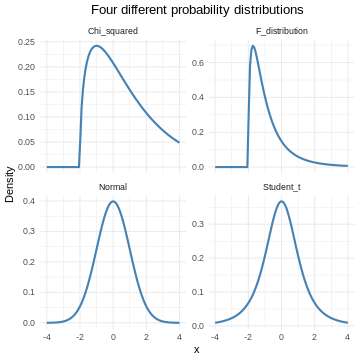

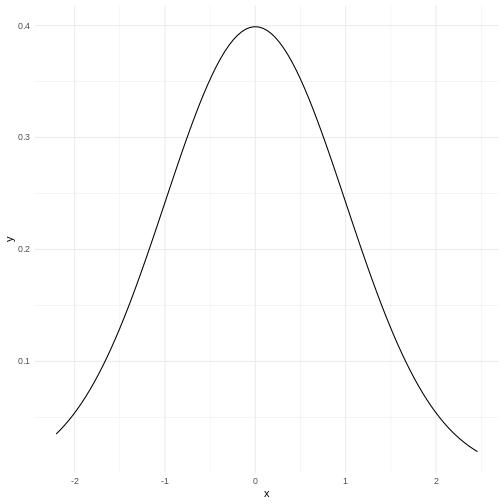

A probability distribution is a mathematical function, that describes the likelihood of different outcomes in a random experiment. It gives us probabilities for all possible outcomes, and is normalised so that the sum of all the probabilities is 1.

Probability distributions can be discrete, or they can be continuous. The normal distribution is just one of several different continuous probability distributions.

The normal distribution is especially important, for a number of reasons:

If we take a lot of samples from a population and calculate the averages of a given variable in those samples, the averages, or means will be normally distributed. This is know as the Central Limit Theorem.

Many natural (and human made) processes follow a normal distribution.

The normal distribution have useful mathematical properties. It might not appear to be simple working with the normal distribution. But the alternative is worse.

Many statistical methods and tests are based on assumptions of normality.

How does it look - mathematically?

The normal distribution follows this formula: